The Early History Of Smalltalk

Alan C. Kay

Apple Computer

kay2@apple.com.Internet#

Permission to copy without fee all or part of this material is

granted provided that the copies are not made or distributed for

direct commercial advantage, the ACM copyright notice and the

title of the publication and its date appear, and notice is given

that copying is by permission of the Association for Computing

Machinery. To copy otherwise, or to republish, requires a fee

and/or specific permission.

HOPL-II/4/93/MA, USA

© 1993 ACM 0-89791-571-2/93/0004/0069...$1.50

Abstract

Most ideas come from previous ideas. The sixties, particularly in the ARPA

community, gave rise to a host of notions about "human-computer symbiosis"

through interactive time-shared computers, graphics screens and pointing

devices. Advanced computer languages were invented to simulate complex systems

such as oil refineries and semi-intelligent behavior. The soon-to-follow paradigm

shift of modern personal computing, overlapping window interfaces, and

object-oriented design came from seeing the work of the sixties as something

more than a "better old thing." That is, more than a better way: to do mainframe

computing; for end-users to invoke functionality; to make data structures more

abstract. Instead the promise of exponential growth in computing /$/ volume

demanded that the sixties be regarded as "almost a new thing" and to find out

what the actual "new things" might be. For example, one would compute with a

handheld "Dynabook" in a way that would not be possible on a shared mainframe;

millions of potential users meant that the user interface would have to

become a learning environment along the lines of Montessori and Bruner; and

needs for large scope, reduction in complexity, and end-user literacy would

require that data and control structures be done away with in favor of a more

biological scheme of protected universal cells interacting only through messages

that could mimic any desired behavior.

Early Smalltalk was the first complete realization of these new points of view

as parented by its many predecessors in hardware, language and user interface

design. It became the exemplar of the new computing, in part, because we were

actually trying for a qualitative shift in belief structures--a new Kuhnian paradigm

in the same spirit as the invention of the printing press--and thus took

highly extreme positions which almost forced these new styles to be invented.

Table of Contents

- Introduction

- I. 1960-66--Early OOP and other formative ideas of the sixties

- B220 File System

- SketchPad & Simula

- II. 1967-69--The FLEX Machine, an OOP-based personal computer

- Doug Engelbart and NLS

- Plasma Panel, GRAIL, LOGO, Dynabook

- III. 1970-72--Xerox PARC

- KiddiKomp

- miniCOM

- Smalltalk-71

- Overlapping Windows

- Font Editing, Painting, Animation, Music

- Byte Codes

- Iconic Programming

- IV. 1972-76--Xerox PARC: The first real Smalltalk (-72)

- The two bets: birth of Smalltalk and Interim Dynabook

- Smalltalk-72 Principles

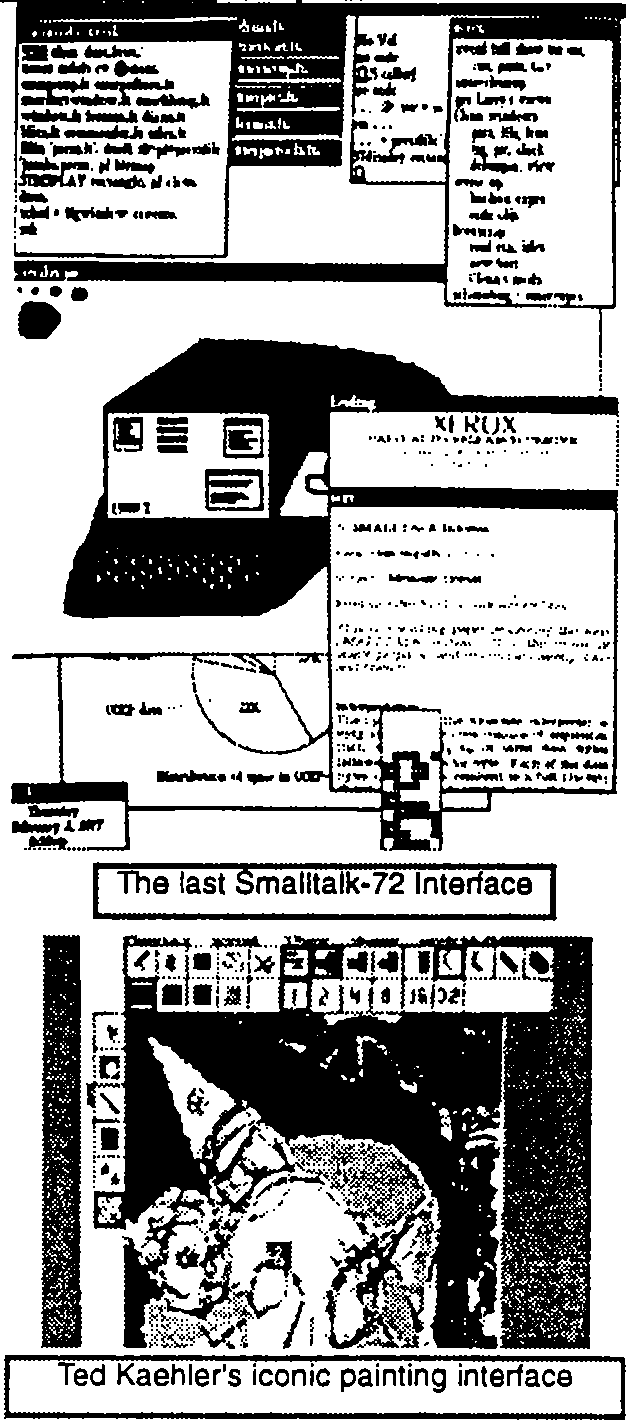

- The Smalltalk User Interface

- Development of the Smalltalk Applications & System

- Evolution of Smalltalk: ST-74, ooze storage management

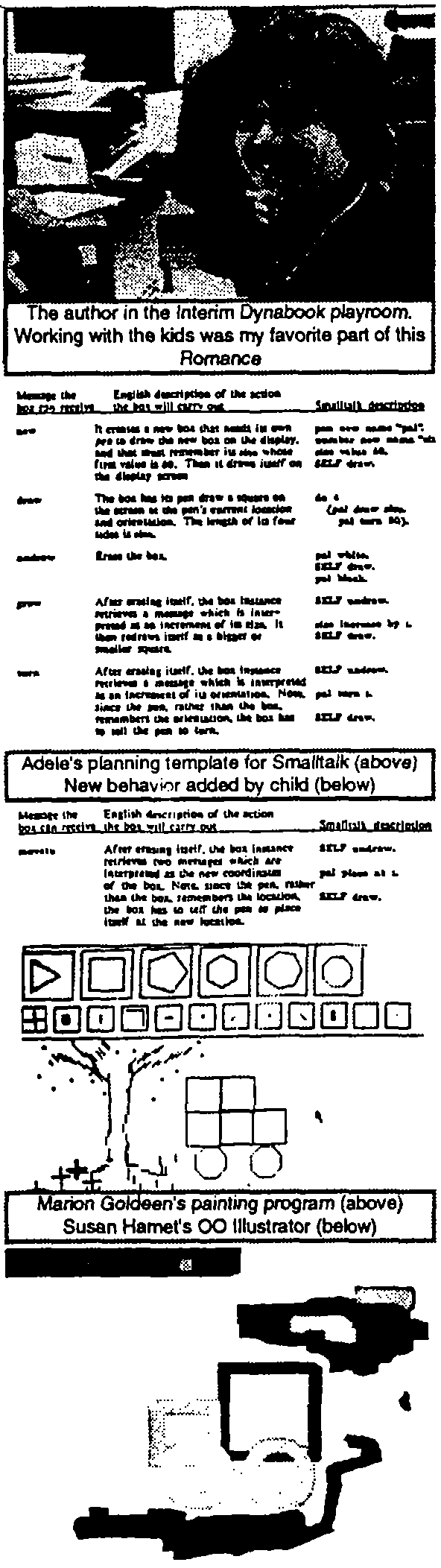

- Smalltalk and Children

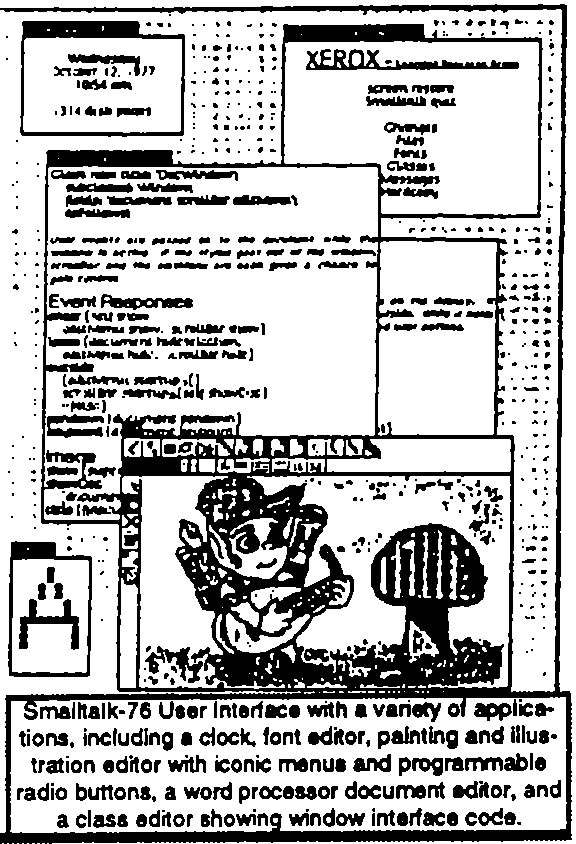

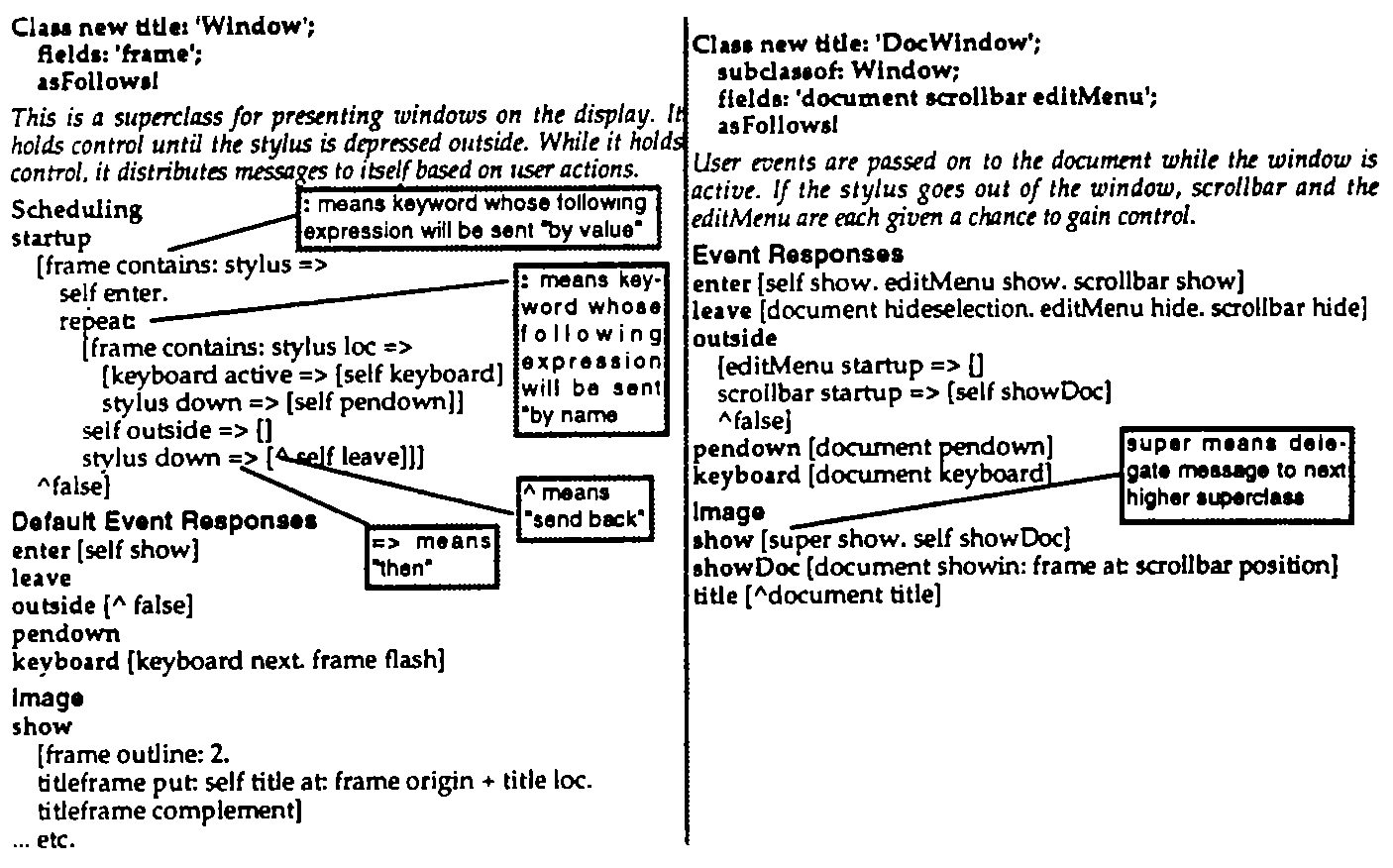

- V. 1976-80--The first modern Smalltalk (-76)

- "Let's burn our disk packs"

- The Notetaker

- Smalltalk-76

- Inheritance

- More Troubles With Xerox

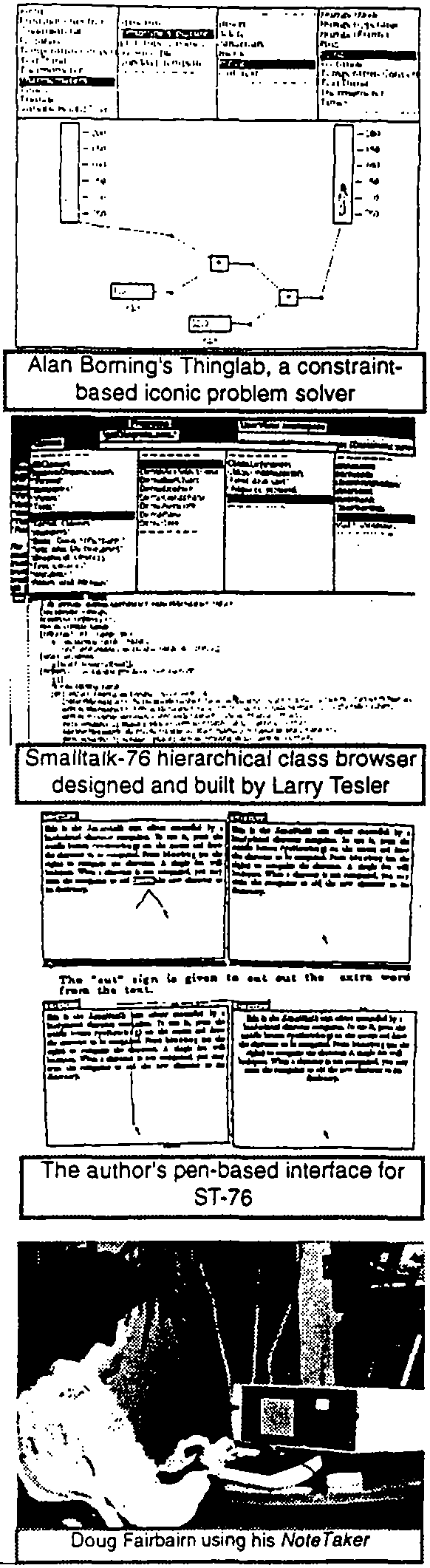

- ThingLab

- Apple Demo

- VI. 1980-83--The release version of Smalltalk (-80)

- References Cited in Text

- Appendix I: KiddiKomp Memo

- Appendix II: Smalltalk-72 Interpreter Design

- Appendix III: Acknowledgments

- Appendix IV: Event Driven Loop Example

- Appendix V: Smalltalk-76 Internal Structures

--To Dan Ingalls, Adele Goldberg and the rest of Xerox PARC LRG gang

-- To Dave Evans, Bob Barton, Marvin Minsky, and Seymour Papert

-- To SKETCHPAD, JOSS, LISP, and SIMULA, the 4 great programming conceptions of the sixties

I'm writing this introduction in an airplane at 35,000 feet. On my lap is a five pound

notebook computer--1992's "Interim Dynabook"--by the end of the year it sold for under $700. It

has a flat, crisp, high-resolution bitmap screen, overlapping windows, icons, a pointing device,

considerable storage and computing capacity, and its best software is object-oriented. It has

advanced networking built-in and there are already options for wireless networking.

Smalltalk runs on this system, and is one of the main systems I use for my current work with

children. In some ways this is more than a Dynabook (quantitatively), and in some ways not

quite there yet (qualitatively). All in all, pretty much what was in mind during the late sixties.

Smalltalk was part of this larger pursuit of ARPA, and later of Xerox PARC, that I called personal

computing. There were so many people involved in each stage from the research communities

that the accurate allocation of credit for ideas is intractably difficult. Instead, as Bob Barton

liked to quote Goethe, we should "share in the excitement of discovery without vain attempts

to claim priority."

I will try to show where most of the influences came from and how they were transformed

in the magnetic field formed by the new personal computing metaphor. It was the attitudes as

well as the great ideas of the pioneers that helped Smalltalk get invented. Many of the people I

admired most at this time--such as Ivan Sutherland, Marvin Minsky, Seymour Papert, Gordon

Moore, Bob Barton, Dave Evans, Butler Lampson, Jerome Bruner, and others--seemed to have

a splendid sense that their creations, though wonderful by relative standards, were not near to

the absolute thresholds that had to be crossed. Small minds try to form religions, the great ones

just want better routes up the mountain. Where Newton said he saw further by standing on the

shoulders of giants, computer scientists all too often stand on each other's toes. Myopia is

still a problem where there are giants' shoulders to stand on--"outsight" is better than

insight--but it can be minimized by using glasses whose lenses are highly sensitive to

esthetics and criticism.

Programming languages can be categorized in a number of ways: imperative, applicative,

logic-based, problem-oriented, etc. But they all seem to be either an "agglutination of features"

or a "crystallization of style." COBOL, PL/1, Ada, etc., belong to the first kind; LISP, APL--

and Smalltalk--are the second kind. It is probably not an accident that the agglutinative languages

all seem to have been instigated by committees, and the crystallization languages by a single person.

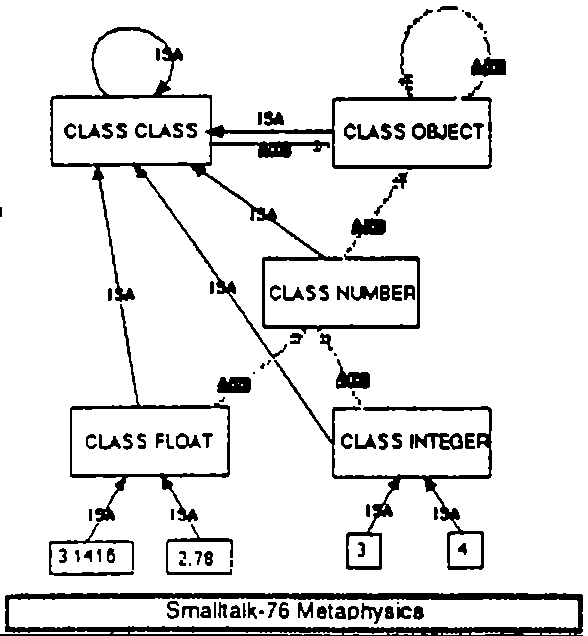

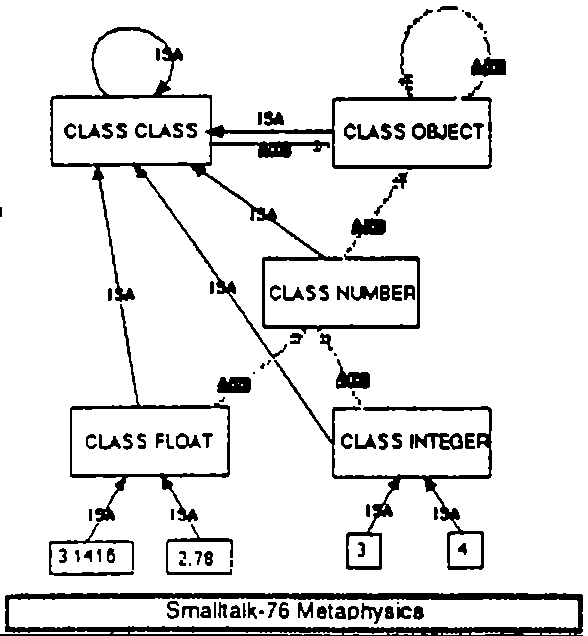

Smalltalk's design--and existence--is due to the insight that everything we can describe can be

represented by the recursive composition of a single kind of behavioral building block that hides its

combination of state and process inside itself and can be dealt with only through the exchange of

messages. Philosophically, Smalltalk's objects have much in common with the monads of Leibniz and

the notions of 20th century physics and biology. Its way of making objects is quite Platonic in that

some of them act as idealisations of concepts--Ideas--from which manifestations can be created. That

the Ideas are themselves manifestations (of the Idea-Idea) and that the Idea-Idea is a-kind-of

Manifestation-Idea--which is a-kind-of itself, so that the system is completely self-describing--

would have been appreciated by Plato as an extremely practical joke [Plato].

In computer terms, Smalltalk is a recursion on the notion of computer itself. Instead of dividing

"computer stuff" into things each less strong than the whole--like data structures, procedures, and

functions which are the usual paraphernalia of programming languages--each Smalltalk object is a

recursion on the entire possibilities of the computer. Thus its semantics are a bit like having thousands

and thousands of computers all hooked together by a very fast network. Questions of concrete representation

can thus be postponed almost indefinitely because we are mainly concerned that the computers

behave appropriately, and are interested in particular strategies only if the results are off or

come back too slowly.

Though it has noble ancestors indeed, Smalltalk's contribution is a new design paradigm--which I

called object-oriented--for attacking large problems of the professional programmer, and making

small ones possible for the novice user. Object-oriented design is a successful attempt to qualitatively

improve the efficiency of modeling the ever more complex dynamic systems and user relationships

made possible by the silicon explosion.

"We would know what they thought

when they did it."

--Richard Hamming

"Memory and imagination are but two

words for the same thing."

--Thomas Hobbes

In this history I will try to be true to Hamming's request as moderated by Hobbes' observation. I

have had difficulty in previous attempts to write about Smalltalk because my emotional involvement

has always been centered on personal computing as an amplifier for human reach--rather than

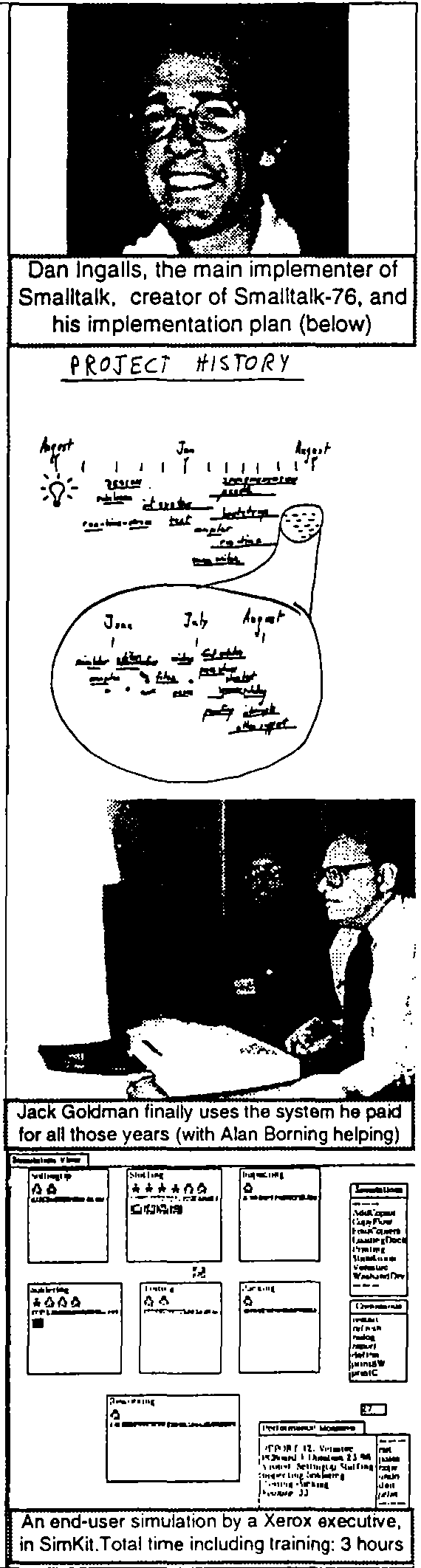

programming system design--and we haven't got there yet. Though I was the instigator and original

designer of Smalltalk, it has always belonged more to the people who made it work and got it out the

door, especially Dan Ingalls and Adele Goldberg. Each of the LRGers contributed in deep and remarkable

ways to the project, and I wish there was enough space to do them all justice. But I think all of

us would agree that for most of the development of Smalltalk, Dan was the central figure.

Programming is at heart a practical art in which real things are built, and a real implementation thus

has to exist. In fact many if not most languages are in use today not because they have any real merits

but because of their existence on one or more machines, their ability to be bootstrapped, etc. But Dan

was far more than a great implementer, he also became more and more of the designer, not just of the

language but also of the user interface as Smalltalk moved into the practical world.

Here, I will try to center focus on the events leading up to Smalltalk-72 and its transition to its

modern form as Smalltalk-76. Most of the ideas occurred here, and many of the earliest stages of OOP

are poorly documented in references almost impossible to find.

This history is too long, but I was amazed at how many people and systems that had an influence

appear only as shadows or not at all. I am sorry not to be able to say more about Bob Balzer, Bob

Barton, Danny Bobrow, Steve Carr, Wes Clark, Barbara Deutsch, Peter Deutsch, Bill Duvall, Bob

Flegal, Laura Gould, Bruce Horn, Butler Lampson, Dave Liddle, William Newman, Bill Paxton,

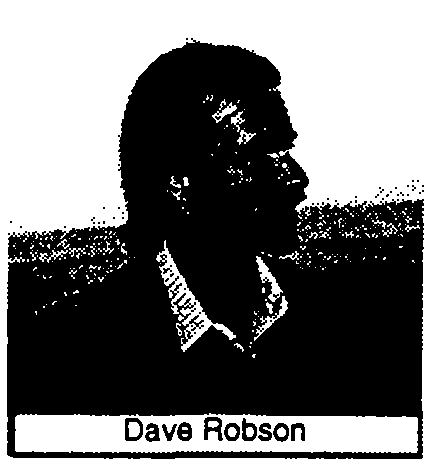

Trygve Reenskaug, Dave Robson, Doug Ross, Paul Rovner, Bob Sproull, Dan Swinehart, Bert

Sutherland, Bob Taylor, Warren Teitelman, Bonnie Tennenbaum, Chuck Thacker, and John Warnock.

Worse, I have omitted to mention many systems whose design I detested, but that generated considerable

useful ideas and attitudes in reaction. In other words, "histories" should not be believed very

seriously but considered as "FEEBLE GESTURES OFF" done long after the actors have departed the stage.

Thanks to the numerous reviewers for enduring the many drafts they had to comment on. Special

thanks to Mike Mahoney for helping so gently that I heeded his suggestions and so well that they

greatly improved this essay--and to Jean Sammet, an old old friend, who quite literally frightened

me into finishing it--I did not want to find out what would happen if I were late. Sherri McLoughlin

and Kim Rose were of great help in getting all the materials together.

Though OOP came from many motivations, two were central. The large scale one was to find a better

module scheme for complex systems involving hiding of details, and the small scale one was to

find a more flexible version of assignment, and then to try to eliminate it altogether. As with most

new ideas, it originally happened in isolated fits and starts.

New ideas go through stages of acceptance, both from within and without. From within, the

sequence moves from "barely seeing" a pattern several times, then noting it but not perceiving its

"cosmic" significance, then using it operationally in several areas, then comes a "grand rotation" in

which the pattern becomes the center of a new way of thinking, and finally, it turns into the same

kind of inflexible religion that it originally broke away from. From without, as Schopenhauer noted,

the new idea is first denounced as the work of the insane, in a few years it is considered obvious and

mundane, and finally the original denouncers will claim to have invented it.

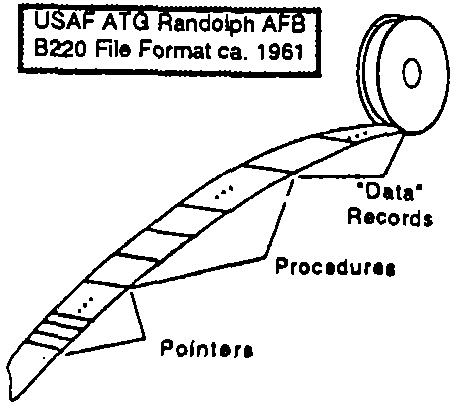

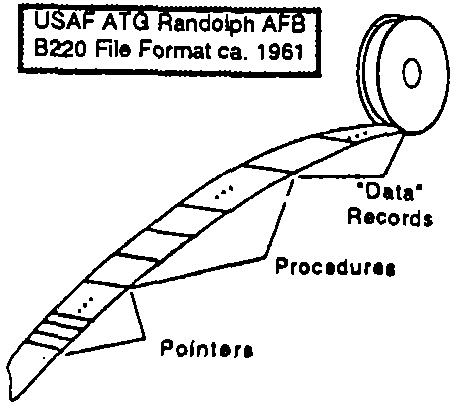

True to the stages, I "barely saw" the idea several times ca. 1961 while a programmer in the Air

Force. The first was on the Burroughs 220 in the form of a style for

transporting files from one Air Training Command installation to

another. There were no standard operating systems or file formats

back then, so some (to this day unknown) designer decided to

finesse the problem by taking each file and dividing it into three

parts. The third part was all of the actual data records of arbitrary

size and format. The second part contained the B220 procedures

that knew how to get at records and fields to copy and update the

third part. And the first part was an array of relative pointers

into entry points of the procedures in the second part (the initial pointers

were in a standard order representing standard meanings).

Needless to say, this was a great idea, and was used in many subsequent systems until the enforced

use of COBOL drove it out of existence.
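
The three-part layout described above can be sketched in Python. This is an illustration only, not the actual B220 format: the class name `SelfDescribingFile`, the slot constants, and both record encodings are assumptions made up for the example. It shows how a fixed, standard-order pointer table lets a reader use a file without knowing how its records are stored.

```python
from dataclasses import dataclass
from typing import Any, Callable, List

# Hypothetical "standard meanings": every file lists its entry points in this order.
READ_FIELD, UPDATE_FIELD = 0, 1


@dataclass
class SelfDescribingFile:
    entry_points: List[int]               # part 1: relative pointers into part 2
    procedures: List[Callable[..., Any]]  # part 2: code that understands part 3
    records: Any                          # part 3: data in a private format

    def call(self, slot: int, *args: Any) -> Any:
        """Jump through the pointer table; the caller never touches the records."""
        procedure = self.procedures[self.entry_points[slot]]
        return procedure(self.records, *args)


def make_string_file() -> SelfDescribingFile:
    """Records kept as comma-separated strings."""
    def read(recs, row, col):
        return recs[row].split(",")[col]

    def update(recs, row, col, value):
        fields = recs[row].split(",")
        fields[col] = value
        recs[row] = ",".join(fields)

    # The procedures sit in a different physical order than the standard slots,
    # so the pointer table is what makes the standard order hold.
    return SelfDescribingFile([1, 0], [update, read], ["kay,utah", "evans,utah"])


def make_list_file() -> SelfDescribingFile:
    """Records kept as nested lists."""
    def read(recs, row, col):
        return recs[row][col]

    def update(recs, row, col, value):
        recs[row][col] = value

    return SelfDescribingFile([0, 1], [read, update], [["kay", "utah"], ["evans", "utah"]])
```

The same calling code, for example `f.call(UPDATE_FIELD, 0, 1, "parc")` followed by `f.call(READ_FIELD, 0, 1)`, works on either file even though the record formats differ.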

The second barely-seeing of the idea came just a little later when ATC decided to replace the 220

with a B5000. I didn't have the perspective to really appreciate it at the time, but I did take note of its

segmented storage system, its efficiency of HLL compilation and byte-coded execution, its automatic

mechanisms for subroutine calling and multiprocess switching, its pure code for sharing, its protected

mechanisms, etc. And, I saw that the access to its Program Reference Table corresponded to the

220 file system scheme of providing a procedural interface to a module. However, my big hit from

this machine at this time was not the OOP idea, but some insights into HLL translation and evaluation.

[Barton, 1961] [Burroughs, 1961]

After the Air Force, I worked my way through the rest of college by programming mostly retrieval

systems for large collections of weather data for the National

Center for Atmospheric Research. I got interested in simulation

in general--particularly of one machine by another--but aside

from doing a one-dimensional version of a bit-field block transfer

(bitblt) on a CDC 6600 to simulate word sizes of various

machines, most of my attention was distracted by school, or I

should say the theatre at school. While in Chippewa Falls helping

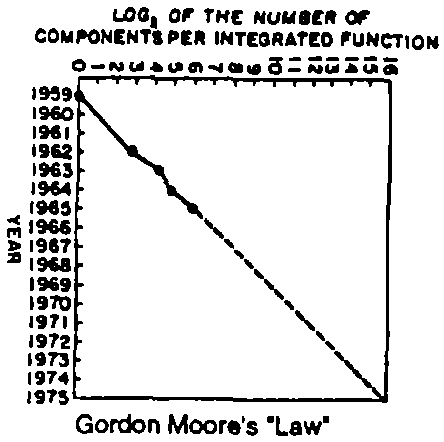

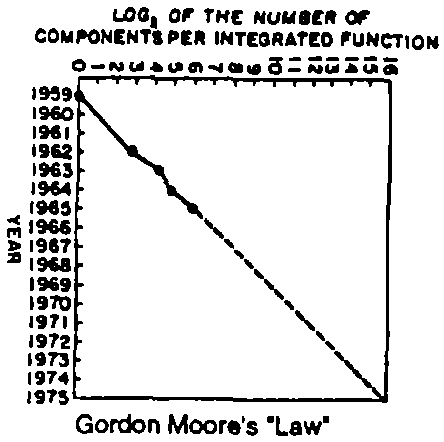

to debug the 6600, I read an article by Gordon Moore which

predicted that integrated silicon on chips was going to exponentially

improve in density and cost over many years [Moore 65].

At the time in 1965, standing next to the room-sized freon-cooled

10 MIP 6600, his astounding predictions had little projection

into my horizons.

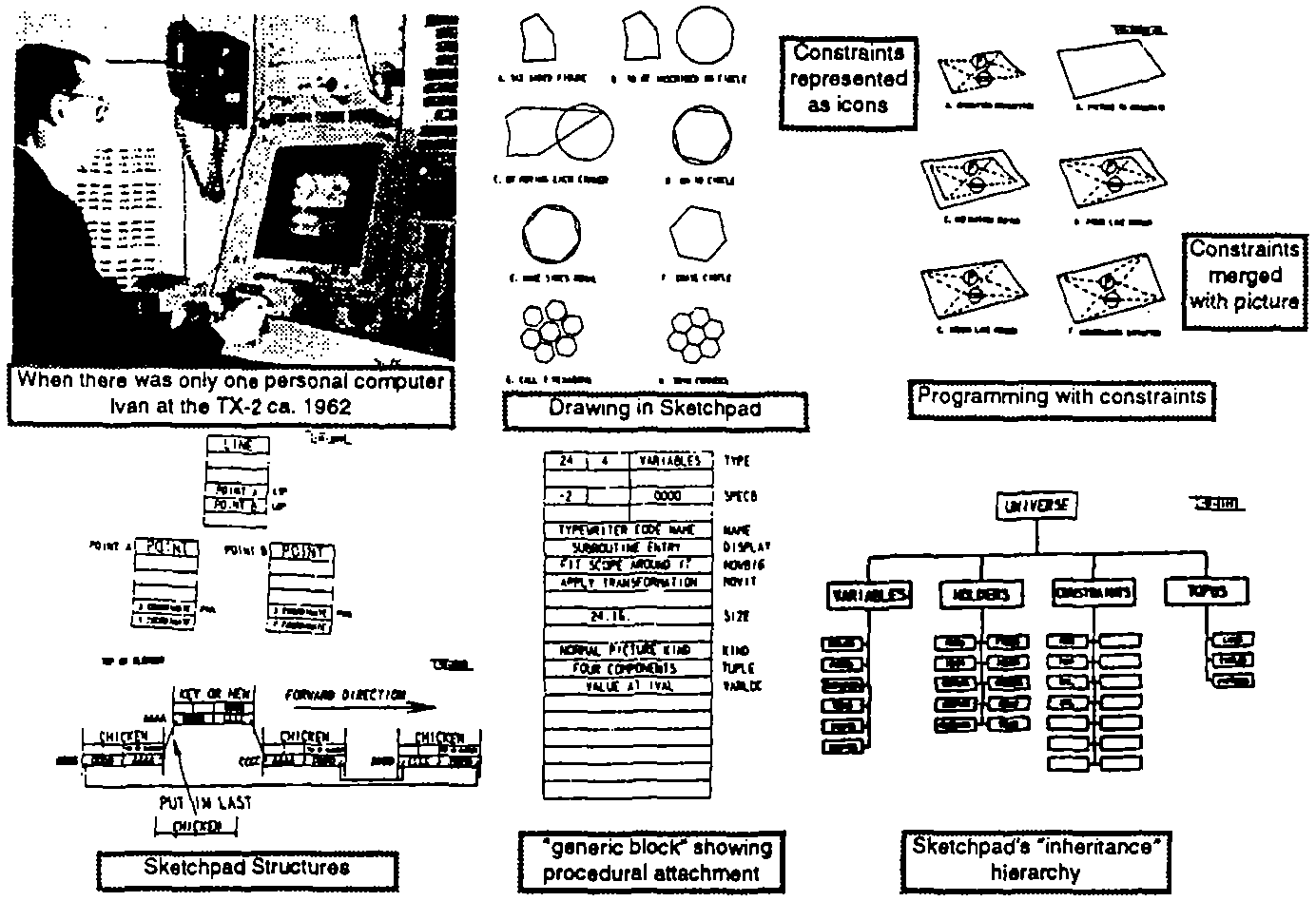

Sketchpad and Simula

Through a series of flukes, I wound up in graduate school at the University of Utah in the Fall of

1966, "knowing nothing." That is to say, I had never heard of ARPA or its projects, or that Utah's main

goal in this community was to solve the "hidden line" problem in 3D graphics, until I actually

walked into Dave Evans' office looking for a job and a desk. On Dave's desk was a foot-high stack of

brown covered documents, one of which he handed to me: "Take this and read it."

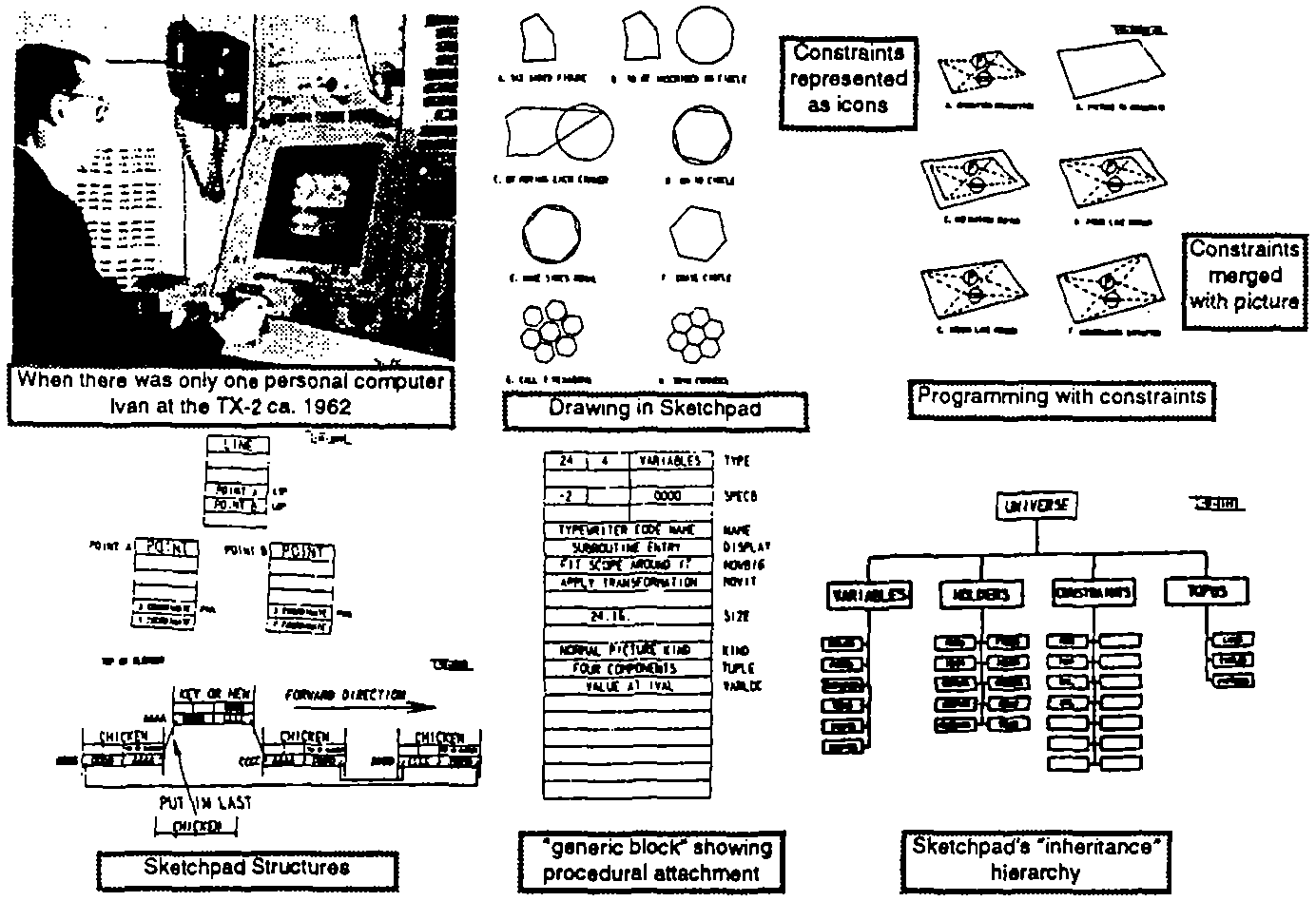

Every newcomer got one. The title was "Sketchpad: A man-machine graphical communication

system" [Sutherland, 1963]. What it could do was quite remarkable, and completely foreign to any use of

a computer I had ever encountered. The three big ideas that were easiest to grapple with were: it was

the invention of modern interactive computer graphics; things were described by making a "master

drawing" that could produce "instance drawings"; control and dynamics were supplied by

"constraints," also in graphical form, that could be applied to the masters to shape and inter-relate parts.

Its data structures were hard to understand--the only vaguely familiar construct was the embedding

of pointers to procedures and using a process called reverse indexing to jump through them to

routines, like the 220 file system [Ross, 1961]. It was the first to have clipping and zooming

windows--one "sketched" on a virtual sheet about 1/3 mile square!

Head whirling, I found my desk. On it was a pile of tapes and listings, and a note: "This is the

Algol for the 1108. It doesn't work. Please make it work." The latest graduate student gets the latest

dirty task.

The documentation was incomprehensible. Supposedly, this was the Case-Western Reserve 1107

Algol--but it had been doctored to make a language called Simula; the documentation read like

Norwegian transliterated into English, which in fact it was. There were uses of words like activity and

process that didn't seem to coincide with normal English usage.

Finally, another graduate student and I unrolled the program listing 80 feet down the hall and

crawled over it yelling discoveries to each other. The weirdest part was the storage allocator, which

did not obey a stack discipline as was usual for Algol. A few days later, that provided the clue. What

Simula was allocating were structures very much like the instances of Sketchpad. There were descriptions

that acted like masters and they could create instances, each of which was an independent entity.

What Sketchpad called masters and instances, Simula called activities and processes. Moreover,

Simula was a procedural language for controlling Sketchpad-like objects, thus having considerably

more flexibility than constraints (though at some cost in elegance) [Nygaard, 1966, Nygaard, 1983].

This was the big hit, and I've not been the same since. I think the reason the hit had such impact

was that I had seen the idea enough times in enough different forms that the final recognition was in

such general terms to have the quality of an epiphany. My math major had centered on abstract algebras

with their few operations generally applying to many structures. My biology minor had focused

on both cell metabolism and larger scale morphogenesis with its notions of simple mechanisms

controlling complex processes and one kind of building block able to differentiate into all needed building

blocks. The 220 file system, the B5000, Sketchpad, and finally Simula, all used the same idea

for different purposes. Bob Barton, the main designer of the B5000 and a professor at Utah had said in

one of his talks a few days earlier: "The basic principle of recursive design is to make the parts have

the same power as the whole." For the first time I thought of the whole as the entire computer and

wondered why anyone would want to divide it up into weaker things called data structures and

procedures. Why not divide it up into little computers, as time sharing was starting to? But not in

dozens. Why not thousands of them, each simulating a useful structure?

I recalled the monads of Leibniz, the "dividing nature at its joints" discourse of Plato, and other

attempts to parse complexity. Of course, philosophy is about opinion and engineering is about deeds,

with science the happy medium somewhere in between. It is not too much of an exaggeration to say

that most of my ideas from then on took their roots from Simula--but not as an attempt to improve

it. It was the promise of an entirely new way to structure computations that took my fancy. As it

turned out, it would take quite a few years to understand how to use the insights and to devise

efficient mechanisms to execute them.

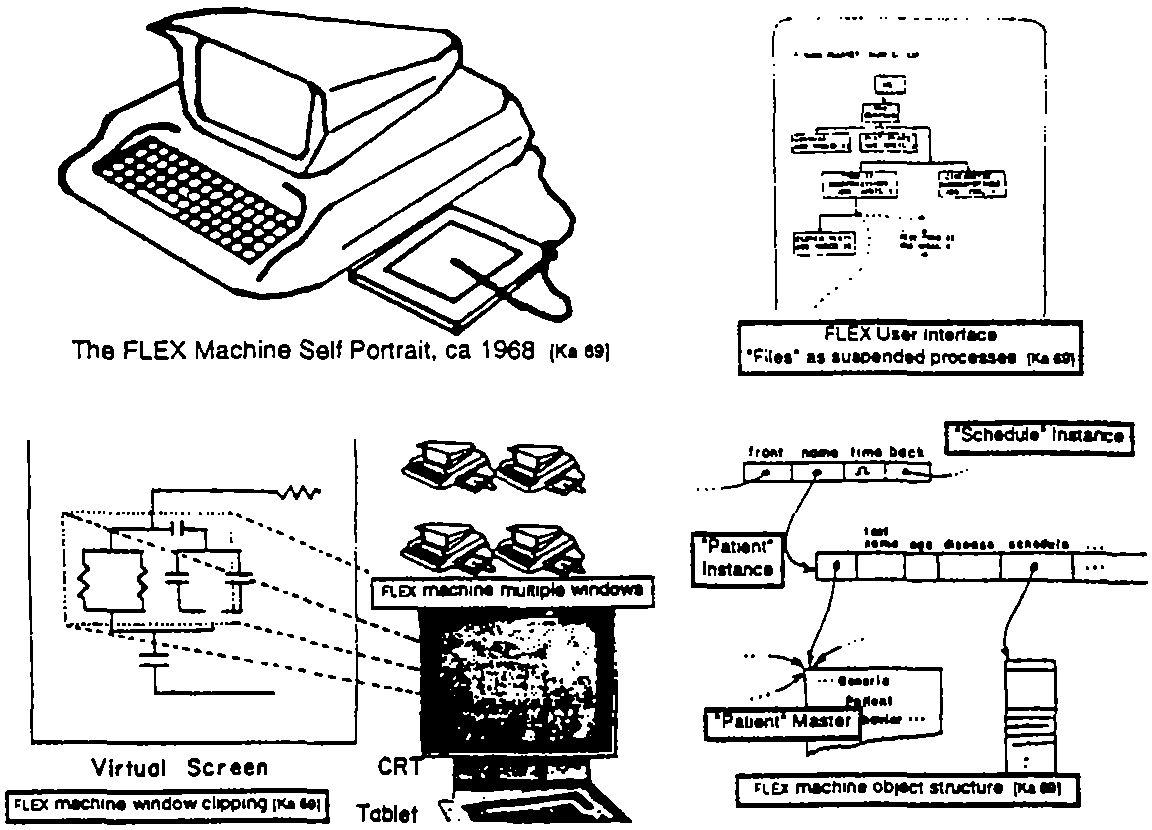

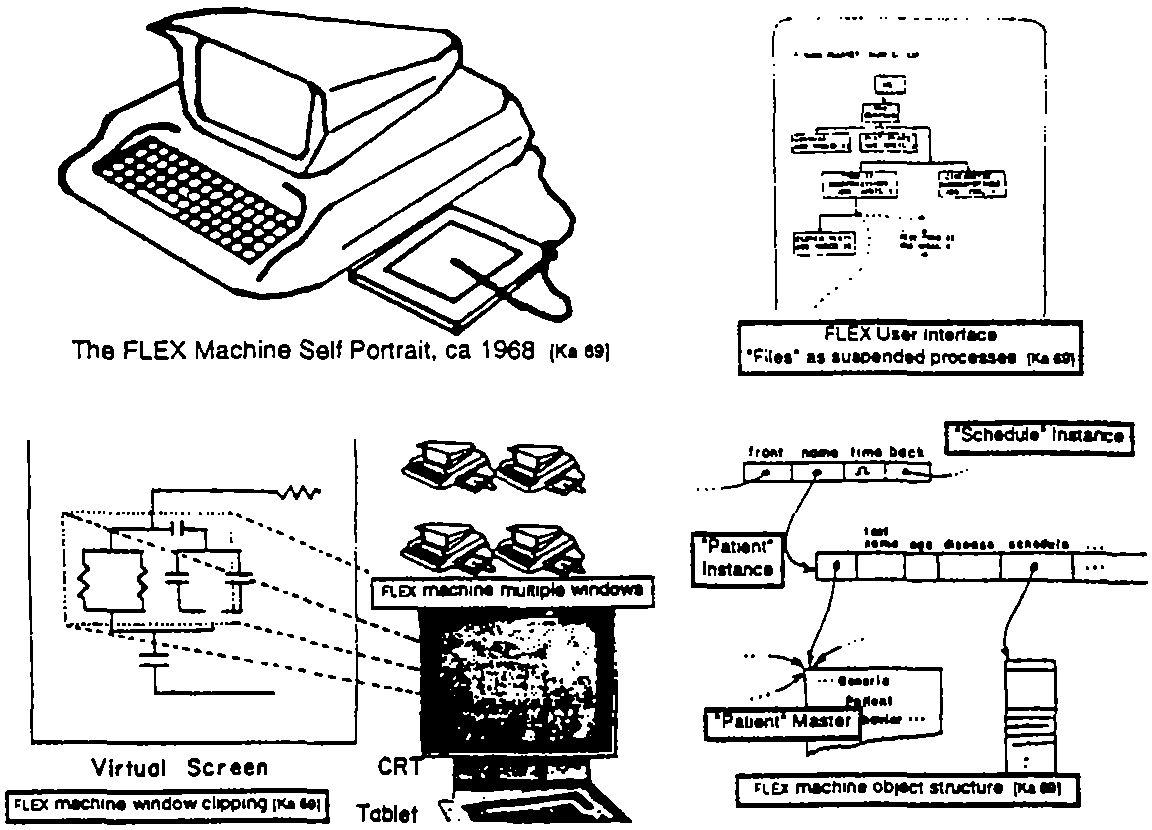

Dave Evans was not a great believer in graduate school

as an institution. As with many of the ARPA "contracts"

he wanted his students to be doing "real things"; they

should move through graduate school as quickly as possible;

and their theses should advance the state of the art.

Dave would often get consulting jobs for his students, and

in early 1967, he introduced me to Ed Cheadle, a friendly

hardware genius at a local aerospace company who was

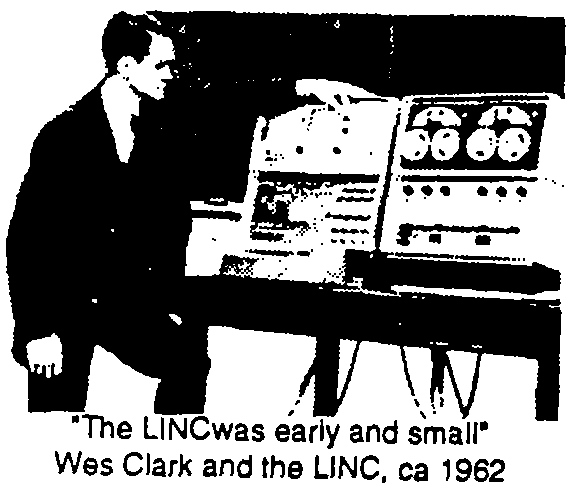

working on a "little machine." It was not the first personal

computer--that was the LINC of Wes Clark--but Ed wanted

it for noncomputer professionals, in particular, he wanted

to program it in a higher level language, like BASIC. I

said: "What about JOSS? It's nicer." He said: "Sure, whatever

you think," and that was the start of a very pleasant

collaboration we called the FLEX machine. As we got deeper into the design, we realized that we wanted

to dynamically simulate and extend, neither of which JOSS (or any existing language that I knew of)

was particularly good at. The machine was too small for Simula, so that was out. The beauty of JOSS

was the extreme attention of its design to the end-user--in this respect, it has not been

surpassed [Joss 1964, Joss 1978]. JOSS was too slow for serious computing (but cf. Lampson 65), did not

have real procedures, variable scope, and so forth. A language that looked a little like JOSS but had

considerably more potential power was Wirth's EULER [Wirth 1966]. This was a generalization of Algol

along lines first set forth by van Wijngaarden [van Wijngaarden 1963] in which types were discarded,

different features consolidated, procedures were made into first class objects, and so forth. Actually

kind of LISP like, but without the deeper insights of LISP.

But EULER was enough of "an almost new thing" to suggest that the same techniques be applied to

simplify Simula. The EULER compiler was a part of its formal definition and made a simple conversion

into B5000-like byte-codes. This was appealing because it suggested that Ed's little machine could

run byte-codes emulated in the longish slow microcode that was then possible. The EULER compiler

however, was tortuously rendered in an "extended precedence" grammar that actually required

concessions in the language syntax (e.g. "," could only be used in one role because the precedence

scheme had no state space). I initially adopted a bottom-up Floyd-Evans parser (adapted from Jerry

Feldman's original compiler-compiler [Feldman 1977]) and later went to various top-down schemes,

several of them related to Shorre's META II [Shorre 1963] that eventually put the translator in the name

space of the language.

The semantics of what was now called the FLEX language needed to be influenced more by Simula

than by Algol or EULER. But it was not completely clear how. Nor was it clear how the users should

interact with the system. Ed had a display (for graphing, etc.) even on his first machine, and the LINC

had a "glass teletype," but a Sketchpad-like system seemed far beyond the scope that we could

accomplish with the maximum of 16k 16-bit words that our cost budget allowed.

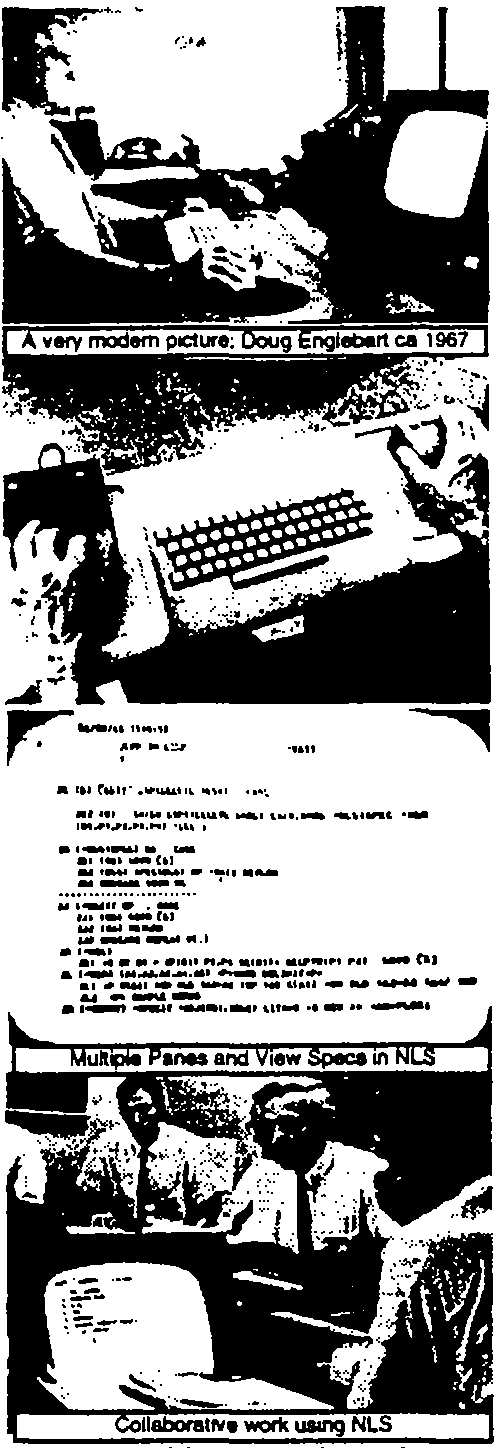

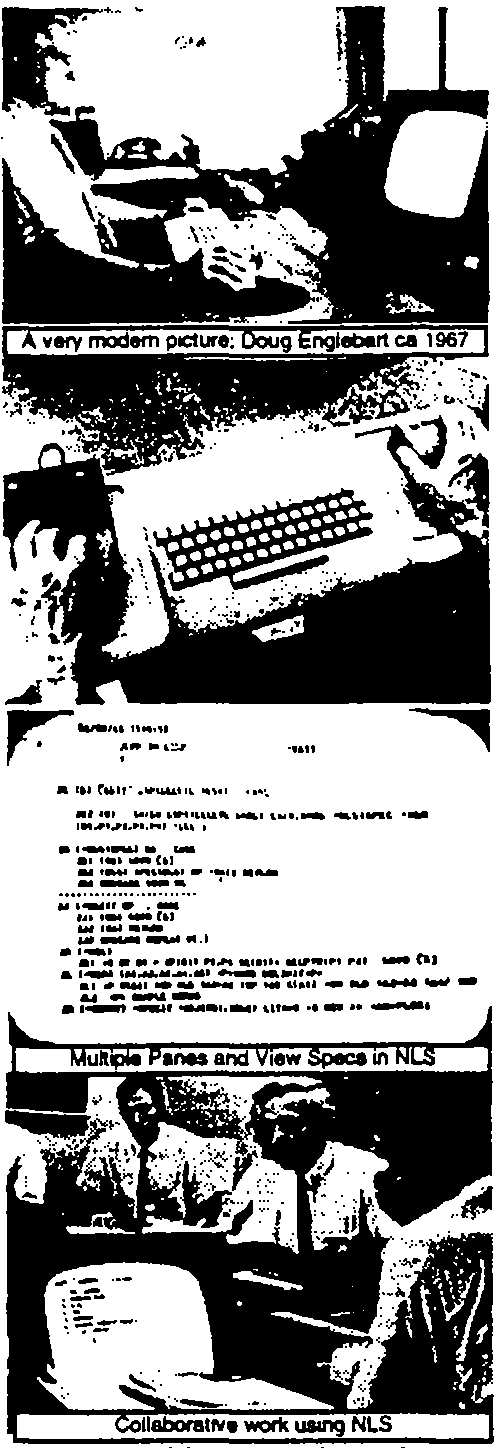

Doug Engelbart and NLS

This was in early 1967, and while we were pondering

the FLEX machine, Utah was visited by Doug Engelbart. A

prophet of Biblical dimensions, he was very much one of

the fathers of what on the FLEX machine I had started to

call "personal computing." He actually traveled with his

own 16mm projector with a remote control for starting

and stopping it to show what was going on (people were

not used to seeing and following cursors back then). His

notion on the ARPA dream was that the destiny of Online

Systems (NLS) was the "augmentation of human

intellect" via an interactive vehicle navigating through

"thought vectors in concept space." What his system

could do then--even by today's standards--was incredible.

Not just hypertext, but graphics, multiple panes, efficient

navigation and command input, interactive collaborative

work, etc. An entire conceptual world and world

view [Engelbart 68]. The impact of this vision was to produce

in the minds of those who were "eager to be

augmented" a compelling metaphor of what interactive computing should be like, and I immediately adopted

many of the ideas for the FLEX machine.

In the midst of the ARPA context of human-computer

symbiosis and in the presence of Ed's "little machine",

Gordon Moore's "Law" again came to mind, this time

with great impact. For the first time I made the leap of

putting the room-sized interactive TX-2 or even a 10 MIP

6600 on a desk. I was almost frightened by the implications;

computing as we knew it couldn't survive--the

actual meaning of the word changed--it must have been

the same kind of disorientation people had after reading

Copernicus and first looking up from a different Earth to a

different Heaven.

Instead of at most a few thousand institutional mainframes

in the world--even today in 1992 it is estimated

that there are only 4000 IBM mainframes in the entire world--and

at most a few thousand users trained for

each application, there would be millions of personal

machines and users, mostly outside of direct institutional

control. Where would the applications and training come

from? Why should we expect an applications programmer

to anticipate the specific needs of a particular one of

the millions of potential users? An extensional system

seemed to be called for in which the end-users would do

most of the tailoring (and even some of the direct

constructions) of their tools. ARPA had already figured this out

in the context of their early successes in time-sharing.

Their larger metaphor of human-computer symbiosis

helped the community avoid making a religion of their subgoals and kept them focused on the

abstract holy grail of "augmentation."

One of the interesting features of NLS was that its user interface was parametric and could be

supplied by the end user in the form of a "grammar of interaction" given in their compiler-compiler

TreeMeta. This was similar to William Newman's early "Reaction Handler" [Newman 66] work in

specifying interfaces by having the end-user or developer construct through tablet and stylus an

iconic regular expression grammar with action procedures at the states (NLS allowed embeddings via

its context free rules). This was attractive in many ways, particularly William's scheme, but to me

there was a monstrous bug in this approach. Namely, these grammars forced the user to be in a

system state which required getting out of before any new kind of interaction could be done. In hierarchical

menus or "screens" one would have to backtrack to a master state in order to go somewhere

else. What seemed to be required were states in which there was a transition arrow to every other

state--not a fruitful concept in formal grammar theory. In other words, a much "flatter" interface

seemed called for--but could such a thing be made interesting and rich enough to be useful?

Again, the scope of the FLEX machine was too small for a miniNLS, and we were forced to find

alternate designs that would incorporate some of the power of the new ideas, and in some cases to

improve them. I decided that Sketchpad's notion of a general window that viewed a larger virtual

world was a better idea than restricted horizontal panes and with Ed came up with a clipping

algorithm very similar to that under development at the same time by Sutherland and his students at

Harvard for the 3D "virtual reality" helmet project [Sutherland 1968].

Object references were handled on the FLEX machine as a generalization of B5000 descriptors.

Instead of a few formats for referencing numbers, arrays, and procedures, a FLEX descriptor

contained two pointers: the first to the "master" of the object, and the second to the object instances (later

we realized that we should put the master pointer in the instance to save space). A different method

was taken for handling generalized assignment. The B5000 used l-values and r-values [Strachey*]

which worked for some cases but couldn't handle more complex objects. For example: a[55] := 0 if a was a

sparse array whose default element was 0 would still generate an element in the array because

:= is an "operator" and a[55] is dereferenced into an l-value before anyone gets to see that the r-value

is the default element, regardless of whether a is an array or a procedure fronting for an array. What

is needed is something like: a(55 := 0), which can look at all relevant operands before any store is

made. In other words, := is not an operator, but a kind of index that can select a behavior from a

complex object. It took me a remarkably long time to see this, partly I think because one has to invert

the traditional notion of operators and functions, etc., to see that objects need to privately own all of

their behaviors: that objects are a kind of mapping whose values are its behaviors. A book on logic by

Carnap [Ca *] helped by showing that "intensional" definitions covered the same territory as the

more traditional extensional technique and were often more intuitive and convenient.
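
The sparse-array case can be made concrete with a small Python sketch. It is not FLEX code: `SparseArray` and `stored_count` are hypothetical names, and Python's `__setitem__` hook stands in for the `a(55 := 0)` form, since it hands the object the index and the new value together before anything is stored.

```python
class SparseArray:
    """Keeps only the elements that differ from the default value."""

    def __init__(self, default=0):
        self.default = default
        self._cells = {}  # index -> explicitly stored value

    def __getitem__(self, index):
        return self._cells.get(index, self.default)

    def __setitem__(self, index, value):
        # The object sees index and value at once, so it can decide that
        # storing the default needs no cell at all.
        if value == self.default:
            self._cells.pop(index, None)
        else:
            self._cells[index] = value

    def stored_count(self):
        return len(self._cells)
```

With this arrangement `a[55] = 0` on a fresh `SparseArray()` allocates nothing, because the assignment is a behavior selected from the object rather than an operator applied to a storage location.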

As in Simula, a coroutining control structure [Conway, 1963] was used as a way to suspend and

resume objects. Persistent objects like files and documents were treated as suspended processes and

were organized according to their Algol-like static variable scopes. These were shown on the screen

and could be opened by pointing at them. Coroutining was also used as a control structure for looping.

A single operator while was used to test the generators which returned false when unable to

furnish a new value. Booleans were used to link multiple generators. So a "for-type" loop would be

written as:

while i <= 1 to 30 by 2 ^ j <= 2 to k by 3 do j<-j * i;

where the ... to ... by ... was a kind of coroutine object. Many of these ideas were reimplemented in a

stronger style in Smalltalk later on.
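
As a rough illustration of generators that return false when they cannot furnish a new value, here is a sketch in Python. The class `ToBy` and the function `paired` are hypothetical names, and the loop body only records the paired values rather than reproducing the FLEX statement exactly.

```python
class ToBy:
    """Hypothetical stand-in for a FLEX '... to ... by ...' coroutine object."""

    def __init__(self, start, stop, step):
        self._next, self._stop, self._step = start, stop, step
        self.value = None

    def advance(self):
        # Returns False when no new value can be furnished.
        if self._next > self._stop:
            return False
        self.value = self._next
        self._next += self._step
        return True


def paired(k):
    i = ToBy(1, 30, 2)
    j = ToBy(2, k, 3)
    seen = []
    # A single 'while' tests both generators; 'and' links them,
    # so the loop ends as soon as either one is exhausted.
    while i.advance() and j.advance():
        seen.append((i.value, j.value))
    return seen
```

With `k = 8` the second generator runs out first, so `paired(8)` yields three pairs.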

![FLEX when statement [Ka 69]](flexstatement.png)

Another control structure of interest in FLEX was a kind of

event-driven "soft interrupt" called when. Its boolean

expression was compiled into a "tournament sort" tree that

cached all possible intermediate results. The relevant variables

were threaded through all of the sorting trees in all of

the whens so that any change only had to compute through

the necessary parts of the booleans. The efficiency was very

high and was similar to the techniques now used for

spreadsheets. This was an embarrassment of riches with

difficulties often encountered in event-driven systems.

Namely, it was a complex task to control the context of just when the whens should be sensitive. Part

of the boolean expression had to be used to check the contexts, where I felt that somehow the

structure of the program should be able to set and unset the event drivers. This turned out to be beyond the

scope of the FLEX system and needed to wait for a better architecture.
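
The idea of threading variables through the conditions that depend on them can be sketched as follows. This is a deliberately simplified stand-in: it uses a plain dependency list rather than the tournament sort tree described above, and every name (`Whens`, `when`, `set`, `evaluations`) is hypothetical.

```python
class Whens:
    """Hypothetical registry of 'when' conditions keyed by the variables they read."""

    def __init__(self):
        self.values = {}
        self.readers = {}      # variable name -> conditions that mention it
        self.evaluations = 0   # counts predicate evaluations, for illustration

    def when(self, names, predicate, action):
        entry = {"names": names, "predicate": predicate,
                 "action": action, "was_true": False}
        for name in names:
            self.readers.setdefault(name, []).append(entry)

    def set(self, name, value):
        self.values[name] = value
        # Only conditions that read this variable are recomputed.
        for entry in self.readers.get(name, []):
            if not all(n in self.values for n in entry["names"]):
                continue
            self.evaluations += 1
            args = (self.values[n] for n in entry["names"])
            is_true = bool(entry["predicate"](*args))
            if is_true and not entry["was_true"]:
                entry["action"]()   # fire on the false-to-true edge
            entry["was_true"] = is_true


fired = []
w = Whens()
w.when(["temp"], lambda t: t > 100, lambda: fired.append("hot"))
w.when(["level"], lambda l: l < 1, lambda: fired.append("low"))
w.set("temp", 50)
w.set("temp", 150)
w.set("temp", 160)
w.set("level", 0)
```

The sketch also shows the difficulty mentioned above: nothing in the program structure says when a condition should be sensitive, so any such context check would have to be folded into the predicate itself.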

Still, quite a few of the original FLEX ideas in their proto-object form did turn out to be small

enough to be feasible on the machine. I was writing the first compiler when something unusual

happened: the Utah graduate students got invited to the ARPA contractors meeting held that year at Alta,

Utah. Towards the end of the three days, Bob Taylor, who had succeeded Ivan Sutherland as head of

ARPA-IPTO, asked the graduate students (sitting in a ring around the outside of the 20 or so contractors)

if they had any comments. John Warnock raised his hand and pointed out that since the ARPA

grad students would all soon be colleagues (and since we did all the real work anyway), ARPA should

have a contractors-type meeting each year for the grad students. Taylor thought this was a great idea

and set it up for the next summer.

Another ski-lodge meeting happened in Park City later that spring. The general topic was education

and it was the first time I heard Marvin Minsky speak. He put forth a terrific diatribe against

traditional education methods, and from him I heard the ideas of Piaget and Papert for the first time.

Marvin's talk was about how we think about complex situations and why schools are really bad

places to learn these skills. He didn't have to make any claims about computer+kids to make his

point. It was clear that education and learning had to be rethought in the light of 20th century

cognitive psychology and how good thinkers really think. Computing enters as a new representation system

with new and useful metaphors for dealing with complexity, especially of systems [Minsky 70].

For the summer 1968 ARPA grad students meeting at Allerton House in Illinois, I boiled all the mechanisms in the FLEX machine down into one 2'x3' chart. This included

all the "object structures," the compiler, the byte-code

interpreter, i/o handlers, and a simple display editor

for text and graphics. The grad students were a

distinguished group that did indeed become colleagues in

subsequent years. My FLEX machine talk was a success,

but the big whammy for me came during a tour of U of Illinois

where I saw a 1" square lump of glass and neon gas in which individual spots would light up on

command--it was the first flat-panel display. I spent the rest

of the conference calculating just when the silicon of the

FLEX machine could be put on the back of the display. According to Gordon Moore's "Law", the

answer seemed to be sometime in the late seventies or early eighties. A long time off--it seemed too

long to worry much about it then.

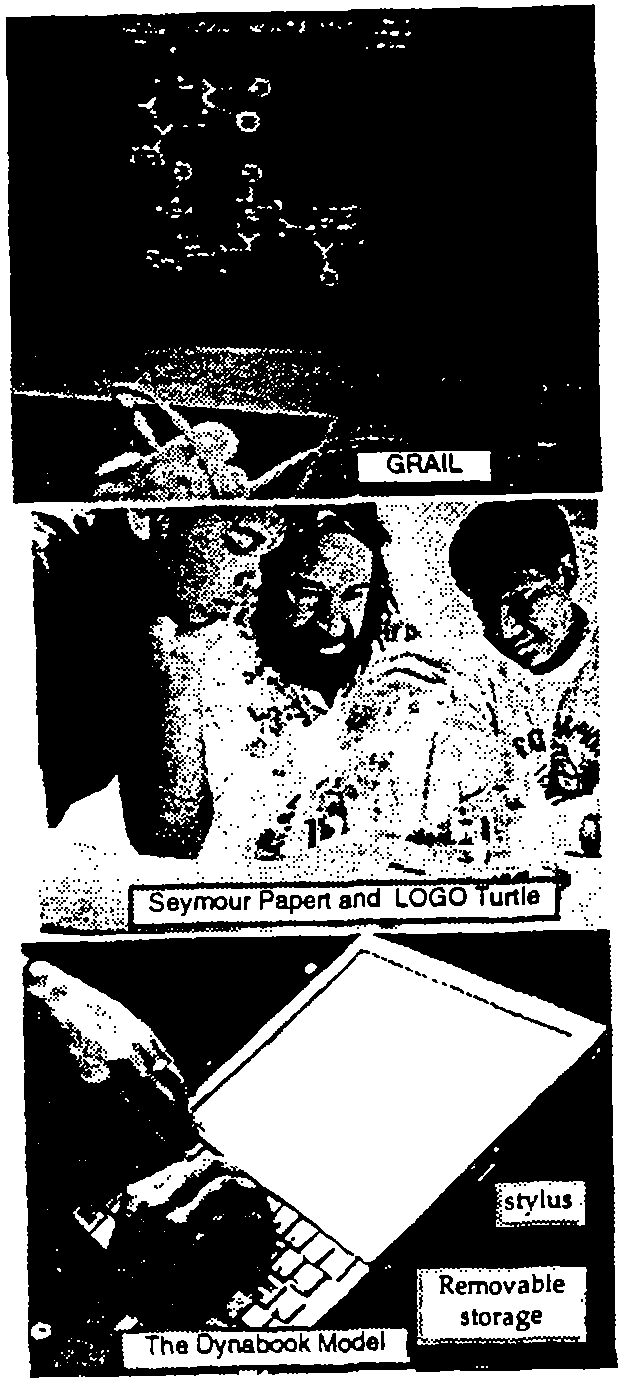

But later that year at RAND I saw a truly beautiful system.

This was GRAIL, the graphical followon to JOSS. The

first tablet (the famous RAND tablet) was invented by

Tom Ellis [Davis 1964] in order to capture human

gestures, and Gabe Groner wrote a program to efficiently

recognize and respond to them [Groner 1966]. Though

everything was fastened with bubble gum and the

system crashed often, I have never forgotten my first interactions

with this system. It was direct manipulation, it

was analogical, it was modeless, it was beautiful. I realized

that the FLEX interface was all wrong, but how could

something like GRAIL be stuffed into such a tiny machine

since it required all of a stand-alone 360/44 to run in?

A month later, I finally visited Seymour Papert, Wally

Feurzeig, Cynthia Solomon and some of the other

original researchers who had built LOGO and were using it

with children in the Lexington schools. Here were children

doing real programming with a specially designed

language and environment. As with Simula leading to

OOP, this encounter finally hit me with what the destiny

of personal computing really was going to be. Not a

personal dynamic vehicle, as in Engelbart's metaphor

opposed to the IBM "railroads", but something much

more profound: a personal dynamic medium. With a

vehicle one could wait until high school and give "drivers

ed", but if it was a medium, it had to extend into

the world of childhood.

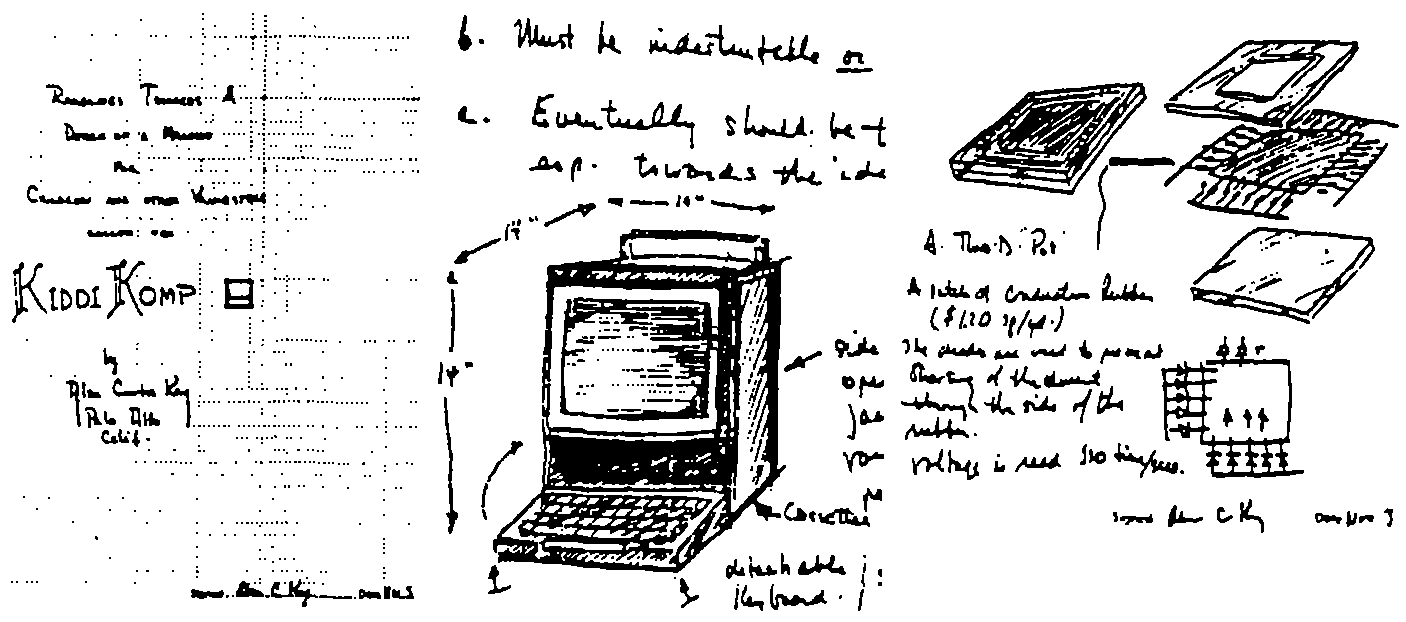

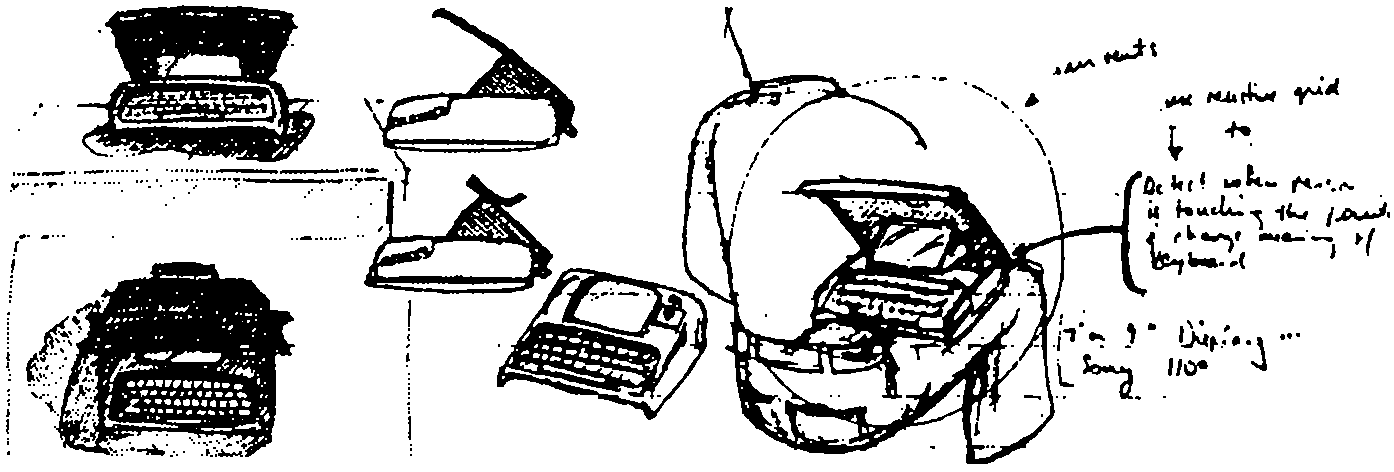

Now the collision of the FLEX machine, the flat-screen

display, GRAIL, Barton's "communications" talk,

McLuhan, and Papert's work with children all came

together to form an image of what a personal computer

really should be. I remembered Aldus Manutius who 40

years after the printing press put the book into its

modern dimensions by making it fit into saddlebags. It had

to be no larger than a notebook, and needed an interface

as friendly as JOSS', GRAIL's, and LOGO's, but with the

reach of Simula and FLEX. A clear romantic vision has a marvelous ability to focus thought and will.

Now it was easy to know what to do next. I built a cardboard model of it to see what it would look

and feel like, and poured in lead pellets to see how light it would have to be (less than two pounds). I

put a keyboard on it as well as a stylus because, even if hand printing and writing were recognized

perfectly (and there was no reason to expect that it would be), there still needed to be a balance

between the low speed tactile degrees of freedom offered by the stylus and the more limited but faster

keyboard. Since ARPA was starting to experiment with packet radio, I expected that the Dynabook,

when it arrived a decade or so hence, would have a wireless networking system.

Early next year (1969) there was a conference on Extensible Languages in which almost every

famous name in the field attended. The debate was great and weighty--it was a religious war of

unimplemented, poorly thought out ideas. As Alan Perlis, one of the great men in Computer Science,

put it with characteristic wit:

It has been such a long time since I have seen so many familiar faces

shouting among so many familiar ideas. Discovery of something new in

programming languages, like any discovery, has somewhat the same

sequence of emotions as falling in love. A sharp elation followed by

euphoria, a feeling of uniqueness, and ultimately the wandering eye

(the urge to generalize) [ACM 69].

But it was all talk--no one had done anything yet. In the midst of all this, Ned Irons got up and

presented IMP, a system that had already been working for several years that was more elegant than

most of the nonworking proposals. The basic idea of IMP was that you could use any phrase in the

grammar as a procedure heading and write a semantic definition in terms of the language as

extended so far [Irons 1970].

I had already made the first version of the FLEX machine syntax driven, but where the meaning of a

phrase was defined in the more usual way as the kind of code that was emitted. This separated the

compiler-extensor part of the system from the end-user. In Irons' approach, every procedure in the

system defined its own syntax in a natural and useful manner. I incorporated these ideas into the

second version of the FLEX machine and started to experiment with the idea of a direct interpreter rather

than a syntax directed compiler. Somewhere in all of this, I realized that the bridge to an object-based

system could be in terms of each object as a syntax directed interpreter of messages sent to it. In one

fell swoop this would unify object-oriented semantics with the ideal of a completely extensible language.

The mental image was one of separate computers sending requests to other computers that

had to be accepted and understood by the receivers before anything could happen. In today's terms,

every object would be a server offering services whose deployment and discretion depended entirely

on the server's notion of relationship with the servee. As Leibniz said: "To get everything out of

nothing, you only need to find one principle." This was not well thought out enough to do the FLEX

machine any good, but formed a good point of departure for my thesis [Kay 69], which as Ivan

Sutherland liked to say was "anything you can get three people to sign."

After three people signed it (Ivan was one of them), I went to the Stanford AI project and spent

much more time thinking about notebook KiddyKomputers than AI. But there were two AI designs

that were very intriguing. The first was Carl Hewitt's PLANNER, a programmable logic system that

formed the deductive basis of Winograd's SHRDLU [Sussman 69, Hewitt 69]. I designed several

languages based on a combination of the pattern matching schemes of FLEX and PLANNER [Kay 70]. The

second design was Pat Winston's concept formation system, a scheme for building semantic

networks and comparing them to form analogies and learning processes [Winston 70]. It was kind of

"object-oriented". One of its many good ideas was that the arcs of each net which served as attributes

in AOV triples should themselves be modeled as nets. Thus, for example, a first order arc called LEFT-OF

could be asked a higher order question such as "What is your converse?" and its net could answer:

RIGHT-OF. This point of view later formed the basis for Minsky's frame systems [Minsky 75]. A few

years later I wished I had paid more attention to this idea.

That fall, I heard a wonderful talk by Butler Lampson about CAL-TSS, a capability-based operating

system that seemed very "object-oriented" [Lampson 69]. Unforgeable pointers (a la B5000) were

extended by bit-masks that restricted access to the object's internal operations. This confirmed my

"objects as server" metaphor. There was also a very nice approach to exception handling which

reminded me of the way failure was often handled in pattern matching systems. The only problem--

which the CAL designers did not see as a problem at all--was that only certain (usually large and

slow) things were "objects". Fast things and small things, etc., weren't. This needed to be fixed.

The biggest hit for me while at SAIL in late '69 was to really understand LISP. Of course, every

student knew about car, cdr, and cons, but Utah was impoverished in that no one there used LISP and

hence, no one had penetrated the mysteries of eval and apply. I could hardly believe how beautiful

and wonderful the idea of LISP was [McCarthy 1960]. I say it this way because LISP had not only been

around enough to get some honest barnacles, but worse, there were deep flaws in its logical

foundations. By this, I mean that the pure language was supposed to be based on functions, but its most

important components--such as lambda expressions, quotes, and conds--were not functions at all,

and instead were called special forms. Landin and others had been able to get quotes and cons in

terms of lambda by tricks that were variously clever and useful, but the flaw remained in the jewel.

In the practical language things were better. There were not just EXPRs (which evaluated their

arguments), but FEXPRs (which did not). My next question was, why on earth call it a functional language?

Why not just base everything on FEXPRs and force evaluation on the receiving side when needed? I

could never get a good answer, but the question was very helpful when it came time to invent

Smalltalk, because this started a line of thought that said "take the hardest and most profound thing

you need to do, make it great, and then build every easier thing out of it". That was the promise of

LISP and the lure of lambda--needed was a better "hardest and most profound" thing. Objects should

be it.

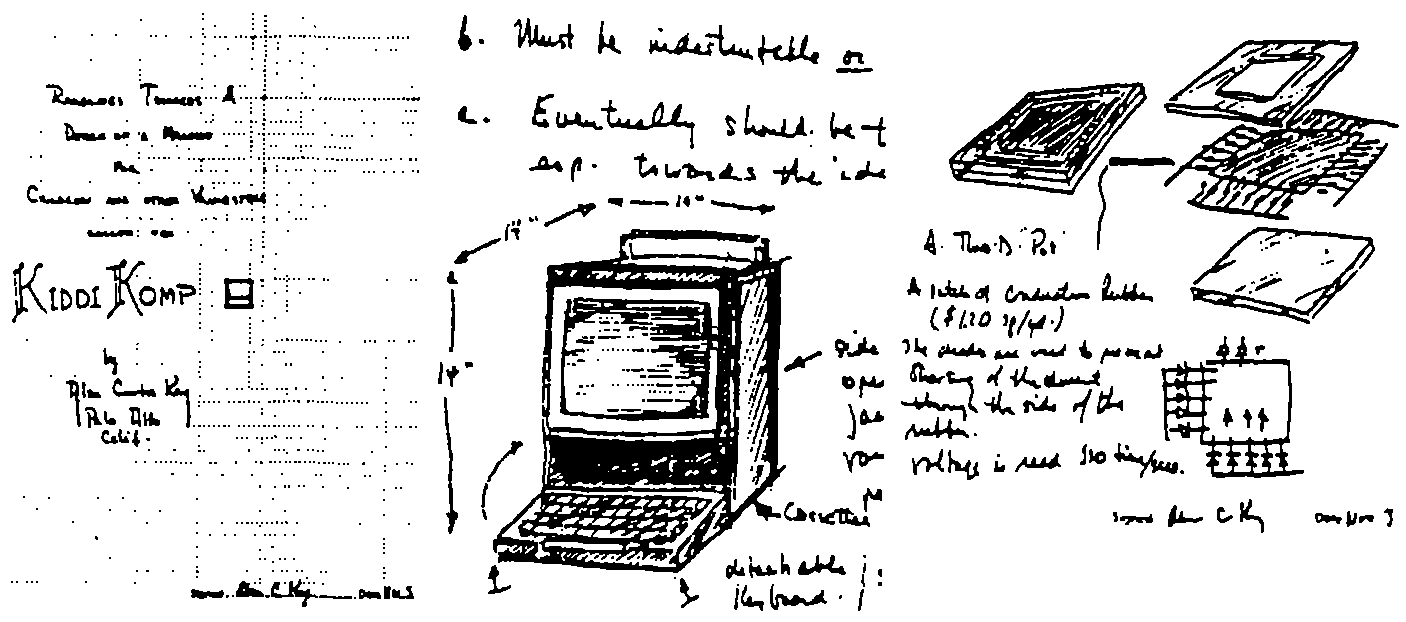

In July 1970, Xerox, at the urging of its chief scientist Jack Goldman, decided to set up a long range

research center in Palo Alto, California. In September, George Pake, the former chancellor at

Washington University where Wes Clark's ARPA project was sited, hired Bob Taylor (who had left the

ARPA office and was taking a sabbatical year at Utah) to start a "Computer Science Laboratory." Bob

visited Palo Alto and we stayed up all night talking about it. The Mansfield Amendment was threatening

to blindly muzzle the most enlightened ARPA funding in favor of directly military research, and

this new opportunity looked like a promising alternative. But work for a company? He wanted me to

consult and I asked for a direction. He said: follow your instincts. I immediately started working up a

new version of the KiddiKomp that could be made in enough quantity to do experiments leading to

the user interface design for the eventual notebook. Bob Barton liked to say that "good ideas don't

often scale." He was certainly right when applied to the FLEX machine. The B5000 just didn't directly

scale down into a tiny machine. Only the byte-codes did, and even these needed modification. I

decided to take another look at Wes Clark's LINC, and was ready to appreciate it much more this time

[Clark 1965].

I still liked pattern-directed approaches and OOP so I came up with a language design called "Simulation LOGO" or SLOGO for short (I had a feeling the first versions might run nice and slow). This

was to be built into a SONY "tummy trinitron" and would use a coarse bit-map display and the FLEX

machine rubber tablet as a pointing device.

Another beautiful system that I had come across was Peter Deutsch's PDP-1 LISP (implemented

when he was only 15) [Deutsch 1966]. It used only 2K (18-bit words) of code and could run quite well

in a 4K machine (it was its own operating system and interface). It seemed that even more could be

done if the system were byte-coded, run by an architecture that was hospitable to dynamic systems,

and stuck into the ever larger ROMs that were becoming available. One of the basic insights I had gotten

from Seymour was that you didn't have to do a lot to make a computer an "object for thought"

for children, but what you did had to be done well and be able to apply deeply.

Right after New Years 1971, Bob Taylor scored an enormous coup by attracting most of the

struggling Berkeley Computer Corp to PARC. This group included Butler Lampson, Chuck Thacker, Peter

Deutsch, Jim Mitchell, Dick Shoup, Willie Sue Haugeland, and Ed Fiala. Jim Mitchell urged the group

to hire Ed McCreight from CMU and he arrived soon after. Gary Starkweather was there already,

having been thrown out of the Xerox Rochester Labs for wanting to build a laser printer (which was

against the local religion). Not long after, many of Doug Engelbart's people joined up--part of the

reason was that they wanted to reimplement NLS as a distributed network system, and Doug wanted to

stay with time-sharing. The group included Bill English (the co-inventor of the mouse), Jeff Rulifson,

and Bill Paxton.

Almost immediately we got into trouble with Xerox when the group decided that the new lab

needed a PDP-10 for continuity with the ARPA community. Xerox (which had bought SDS essentially

sight unseen a few years before) was horrified at the idea of their main competitor's computer being

used in the lab. They balked. The newly formed PARC group had a meeting in which it was decided

that it would take about three years to do a good operating system for the XDS SIGMA-7 but that we

could build "our own PDP-10" in a year. My reaction was "Holy cow!" In fact, they pulled it off with

considerable panache. MAXC was actually a microcoded emulation of the PDP-10 that used for the first

time the new integrated chip memories (1K bits!) instead of core memory. Having practical in-house

experience with both of these new technologies was critical for the more radical systems to come.

One little incident of LISP beauty happened when Allen Newell visited PARC with his theory

of hierarchical thinking and was challenged to prove it. He was given a programming problem to solve

while the protocol was collected. The problem was: given a list of items, produce a list consisting of

all of the odd indexed items followed by all of the even indexed items. Newell's internal programming

language resembled IPL-V in which pointers are manipulated explicitly, and he got into quite a

struggle to do the program. In 2 seconds I wrote down:

oddsEvens(x) = append(odds(x), evens(x))

the statement of the problem in Landin's LISP syntax--and also the first part of the solution. Then a

few seconds later:

where odds(x) = if null(x) v null(tl(x)) then x

else hd(x) & odds(ttl(x))

evens(x) = if null(x) v null(tl(x)) then nil

else odds(tl(x))

This characteristic of writing down many solutions in declarative form and having them also be the

programs is part of the appeal and beauty of this kind of language. Watching a famous guy much

smarter than I struggle for more than 30 minutes to not quite solve the problem his way (there was a

bug) made quite an impression. It brought home to me once again that "point of view is worth 80 IQ

points." I wasn't smarter but I had a much better internal thinking tool to amplify my abilities. This

incident and others like it made paramount that any tool for children should have great thinking

patterns and deep beauty "built-in."
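
For readers who want to run the odds-and-evens definitions, here is a near-literal transcription into Python. It is a sketch only: Python lists stand in for LISP lists, slicing stands in for `tl` and `ttl`, and the function names are adapted to Python conventions.

```python
def odds(x):
    # Items at positions 1, 3, 5, ... (counting from 1).
    if not x or not x[1:]:
        return x
    return [x[0]] + odds(x[2:])


def evens(x):
    # Items at positions 2, 4, 6, ...: the odds of the tail.
    if not x or not x[1:]:
        return []
    return odds(x[1:])


def odds_evens(x):
    # The statement of the problem is also the top of the program.
    return odds(x) + evens(x)
```

For example, `odds_evens([1, 2, 3, 4, 5])` gives `[1, 3, 5, 2, 4]`.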

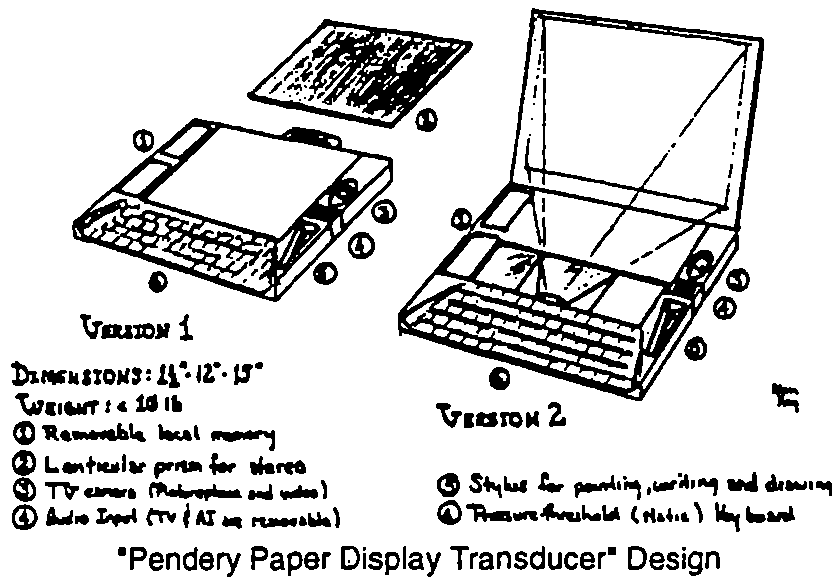

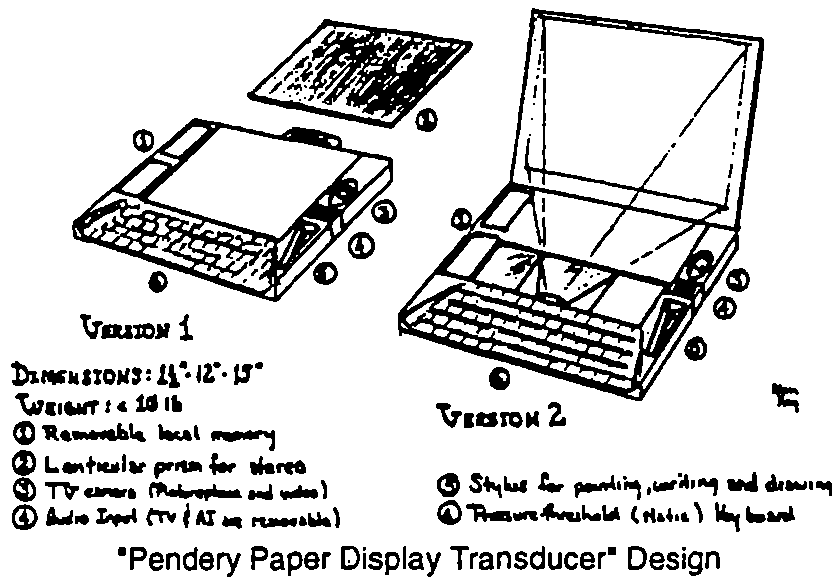

Right around this time we were involved in another conflict with Xerox management, in particular

with Don Pendery, the head "planner". He really didn't understand what we were talking about and

instead was interested in "trends" and

"what was the future going to be like"

and how could Xerox "defend against

it." I got so upset I said to him, "Look.

The best way to predict the future is to

invent it. Don't worry about what all

those other people might do, this is

the century in which almost any clear vision can be made!" He remained

unconvinced, and that led to the famous "Pendery Papers for PARC

Planning Purposes," a collection of

essays on various aspects of the

future. Mine proposed a version of the notebook as a "Display Transducer,"

and Jim Mitchell's was entitled "NLS

on a Minicomputer."

Bill English took me under his wing and helped me start my group as I had always been a lone

wolf and had no idea how to do it. One of his suggestions was that I should make a budget. I'm

afraid that I really did ask Bill, "What's a budget?" I remembered at Utah, in pre-Mansfield

Amendment days, Dave Evans saying to me as he went off on a trip to ARPA, "We're almost out of

money. Got to go get some more." That seemed about right to me. They give you some money. You

spend it to find out what to do next. You run out. They give you some more. And so on. PARC never

quite made it to that idyllic standard, but for the first half decade it came close. I needed a group

because I had finally realized that I did not have all of the temperaments required to completely finish

an idea. I called it the Learning Research Group (LRG) to be as vague as possible about our charter.

I only hired people that got stars in their eyes when they heard about the notebook computer idea. I

didn't like meetings: didn't believe brainstorming could substitute for cool sustained thought. When

anyone asked me what to do, and I didn't have a strong idea, I would point at the notebook model

and say, "Advance that." LRG members developed a very close relationship with each other--as Dan

Ingalls was to say later: "... the rest has enfolded through the love and energy of the whole Learning

Research Group." A lot of daytime was spent outside of PARC, playing tennis, bikeriding, drinking

beer, eating Chinese food, and constantly talking about the Dynabook and its potential to amplify

human reach and bring new ways of thinking to a faltering civilization that desperately needed it

(that kind of goal was common in California in the aftermath of the sixties).

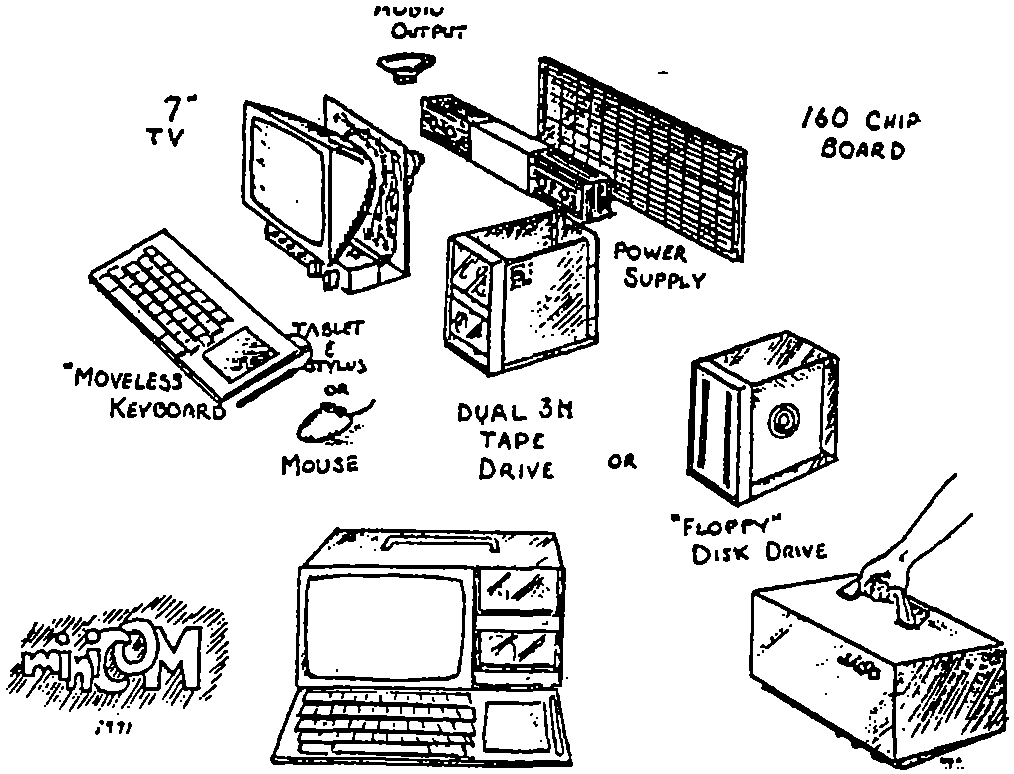

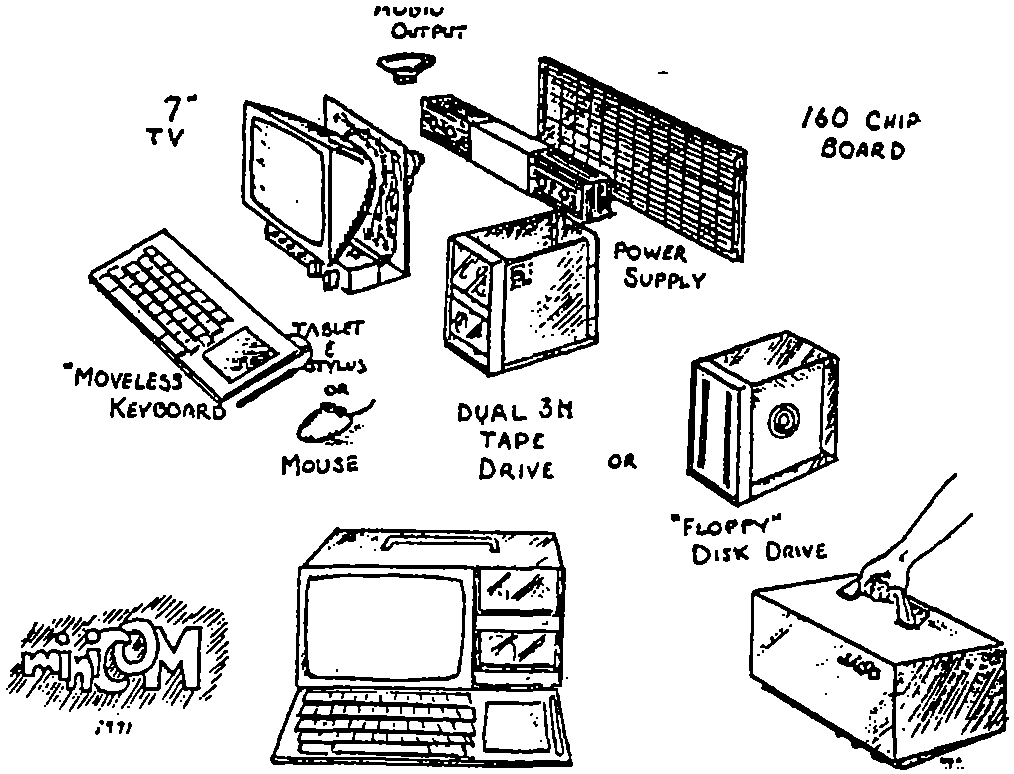

In the summer of '71 I refined the KiddiKomp idea into a tighter design called miniCOM. It used a

bit-slice approach like the NOVA 1200, had a bit-map display, a pointing device, a choice of

"secondary" (really tertiary) storages, and a language I now called "Smalltalk"--as in "programming

should be a matter of ..." and "children should program in ...". The name was also a reaction against

the "IndoEuropean god theory" where systems were named Zeus, Odin, and Thor, and hardly did

anything. I figured that "Smalltalk" was so innocuous a label that if it ever did anything nice people

would be pleasantly surprised.

This Smalltalk language (today labeled -71) was very influenced by FLEX, PLANNER, LOGO, META II, and my own

derivatives from them. It was a kind of parser with

object-attachment that executed tokens directly. (I think

the awkward quoting conventions come from META). I

was less interested in programs as algebraic patterns

than I was in a clear scheme that could handle a variety

of styles of programming. The patterned front-end

allowed simple extension, patterns as "data" to be

retrieved, a simple way to attach behaviors to objects,

and a rudimentary but clear expression of its eval in

terms that I thought children could understand after a

few years experience with simpler programming.

Program storage was sorted into a discrimination net and

evaluation was straightforward pattern-matching.

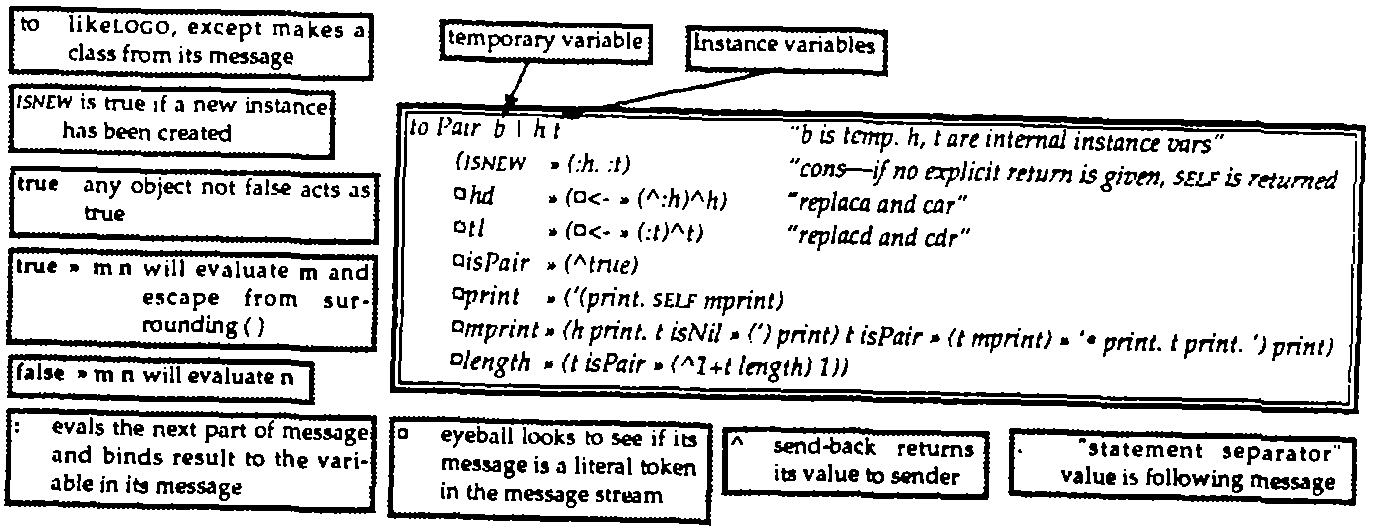

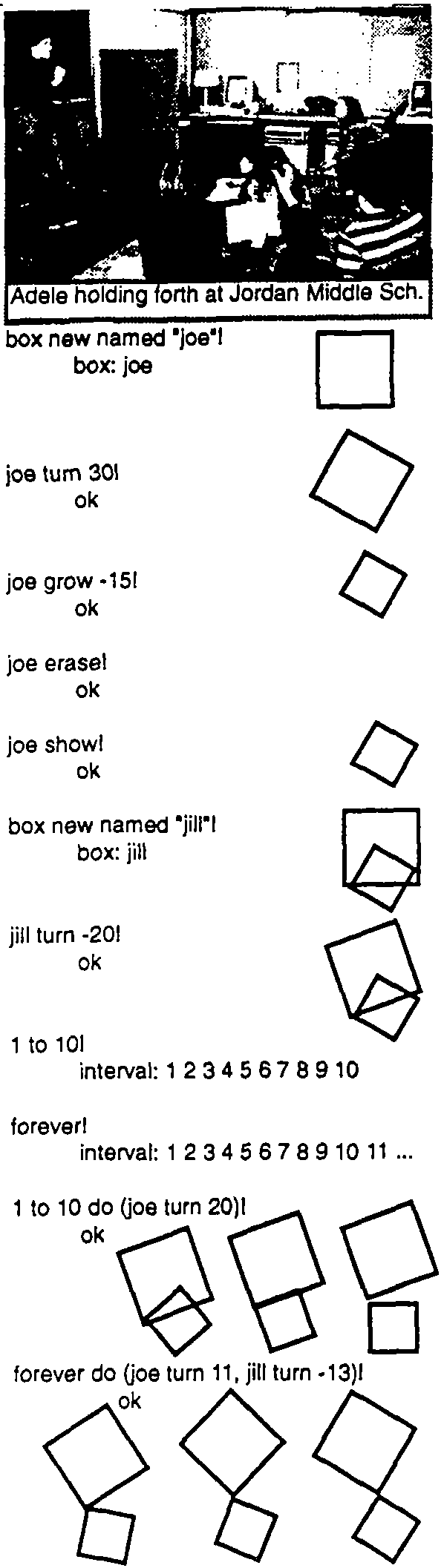

Smalltalk-71 Programs

to T 'and' :y do 'y'

to F 'and' :y do F

to 'factorial' 0 is 1

to 'factorial' :n do 'n*factorial n-1'

to 'fact' :n do 'to 'fact' n do factorial n. ^ fact n'

to :e 'is-member-of' [] do F

to :e 'is-member-of' :group

do 'if e = firstof group then T

else e is-member-of rest of group'

to 'cons' :x :y is self

to 'hd' ('cons' :a :b) do 'a'

to 'hd' ('cons' :a :b) '<-' :c do 'a <- c'

to 'tl' ('cons' :a :b) do 'b'

to 'tl' ('cons' :a :b) '<-' :c do 'b <- c'

to :robot 'pickup' :block

do 'robot clear-top-of block.

robot hand move-to block.

robot hand lift block 50.

to 'height-of' block do 50'

As I mentioned previously, it was annoying that the

surface beauty of LISP was marred by some of its key

parts having to be introduced as "special forms" rather

than as its supposed universal building block of

functions. The actual beauty of LISP came more from the

promise of its metastructures than its actual model. I spent

a fair amount of time thinking about how objects could

be characterized as universal computers without having to have any exceptions in the central

metaphor. What seemed to be needed was complete control over what was passed in a message send;

in particular when and in what environment did expressions get evaluated?

An elegant approach was suggested in a CMU thesis of Dave Fisher [Fisher 70] on the synthesis of

control structures. ALGOL60 required a separate link for dynamic subroutine linking and for access to

static global state. Fisher showed how a generalization of these links could be used to simulate a

wide variety of control environments. One of the ways to solve the "funarg problem" of LISP is to

associate the proper global state link with expressions and functions that are to be evaluated later so

that the free variables referenced are the ones that were actually implied by the static form of the

language. The notion of "lazy evaluation" is anticipated here as well.
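
In present-day terms, attaching the proper global state link to a function is what a closure does, and packaging an unevaluated expression with its environment is a thunk. The following Python sketch illustrates both; it is not Fisher's mechanism, and the names `make_scaler`, `noisy`, and `thunk` are hypothetical.

```python
def make_scaler(factor):
    # 'scale' keeps a link to the environment in which it was defined,
    # so 'factor' below always means this call's argument.
    def scale(x):
        return x * factor
    return scale


factor = 1000            # an unrelated binding at the point of use
triple = make_scaler(3)
result = triple(5)       # uses factor == 3 from the defining environment

log = []


def noisy(v):
    log.append(v)        # records when evaluation actually happens
    return v


thunk = lambda: noisy(7)   # expression plus environment, not yet evaluated
log_before = list(log)
value = thunk()            # evaluation is forced only here
log_after = list(log)
```

The free variable `factor` inside `scale` resolves to the binding implied by the static form of the program, not to the unrelated `factor` visible where `triple` is called.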

Nowadays this approach would be called reflective design. Putting it together with the FLEX models

suggested that all that should be required for "doing LISP right" or "doing OOP right" would be to

handle the mechanics of invocations between modules without having to worry about the details of

the modules themselves. The difference between LISP and OOP (or any other system) would then be

what the modules could contain. A universal module (object) reference--a la B5000 and LISP--and a

message holding structure--which could be virtual if the senders and receivers were simpatico--

that could be used by all would do the job.

If all of the fields of a messenger structure were enumerated according to this view, we would

have:

| GLOBAL: | the environment of the parameter values |

| SENDER: | the sender of the message |

| RECEIVER: | the receiver of the message |

| REPLY-STYLE: | wait, fork, ...? |

| STATUS: | progress of the message |

| REPLY: | eventual result (if any) |

| OPERATION SELECTOR: | relative to the receiver |

| # OF PARAMETERS: | |

| P1: | |

| ...: | |

| Pn: | |

This is a generalization of a stack frame, such as used by the B5000, and very similar to what a good

intermodule scheme would require in an operating system such as CAL-TSS--a lot of state for every

transaction, but useful to think about.
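
The enumerated fields can be written down as a record to make the "generalized stack frame" reading concrete. This is a sketch under assumptions: the field types, default values, and the toy `Counter` receiver are hypothetical, and the parameter count is implied by the length of `params` rather than stored separately.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class Message:
    global_env: dict                 # GLOBAL: environment of the parameter values
    sender: Any                      # SENDER
    receiver: Any                    # RECEIVER
    reply_style: str = "wait"        # REPLY-STYLE: wait, fork, ...
    status: str = "pending"          # STATUS: progress of the message
    reply: Optional[Any] = None      # REPLY: eventual result (if any)
    selector: str = ""               # OPERATION SELECTOR, relative to the receiver
    params: list = field(default_factory=list)   # P1 ... Pn


class Counter:
    """Toy receiver: it alone decides what a selector means."""

    def __init__(self):
        self.n = 0

    def receive(self, msg):
        if msg.selector == "add":
            self.n += msg.params[0]
            msg.reply, msg.status = self.n, "done"
        else:
            msg.status = "not understood"
        return msg


c = Counter()
ok = c.receive(Message({}, None, c, selector="add", params=[3]))
bad = c.receive(Message({}, None, c, selector="fly"))
```

Nothing outside `Counter` interprets the selector, which is the sense in which the invocation mechanics stay independent of what the modules contain.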

Much of the pondering during this state of grace (before any workable implementation) had to do

with trying to understand what "beautiful" might mean with reference to object-oriented design. A

subjective definition of a beautiful thing is fairly easy but is not of much help: we think a thing

beautiful because it evokes certain emotions. The cliche has it like "in the eye of the beholder" so that it is

difficult to think of beauty as other than a relation between subject and object in which the predispositions

of the subject are all important.

If there are such things as universally appealing forms then we can perhaps look to our shared

biological heritage for the predispositions. But, for an object like LISP, it is almost certain that most of

the basis of our judgement is learned and has much to do with other related areas that we think are

beautiful, such as much of mathematics.

One part of the perceived beauty of mathematics has to do with a wondrous synergy between parsimony,

generality, enlightenment, and finesse. For example, the Pythagorean Theorem is expressible

in a single line, is true for all of the infinite number of right triangles, is incredibly useful in

understanding many other relationships, and can be shown by a few simple but profound steps.

When we turn to the various languages for specifying computations we find many to be general

and a few to be parsimonious. For example, we can define universal machine languages in just a few

instructions that can specify anything that can be computed. But most of these we would not call

beautiful, in part because the amount and kind of code that has to be written to do anything interesting

is so contrived and turgid. A simple and small system that can do interesting things also needs a

"high slope"--that is a good match between the degree of interestingness and the level of complexity

needed to express it.

A fertilized egg that can transform itself into the myriad of specializations needed to make a complex

organism has parsimony, generality, enlightenment, and finesse--in short, beauty, and a beauty

much more in line with my own esthetics. I mean by this that Nature is wonderful both at elegance

and practicality--the cell membrane is partly there to allow useful evolutionary kludges to do their

necessary work and still be able to act as components by presenting a uniform interface to the world.

One of my continual worries at this time was about the size of the bit-map display. Even if a mixed

mode was used (between fine-grained generated characters and coarse-grained general bit-mao for

graphics) it would be hard to get enough information on the screen. It occurred to me (ina shower,

my favorite place to think) that FLEXtype windows on a bit-map displahy could be made to appear as

overlapping documents on a desktop. When an overlapped one was refreshed it would appear to

come to the top of the stack. At the time, this did not appear as the wonderful solujtion to the problem

but it did have the effect of magnifying the effective area of the display anormously, so I decided to

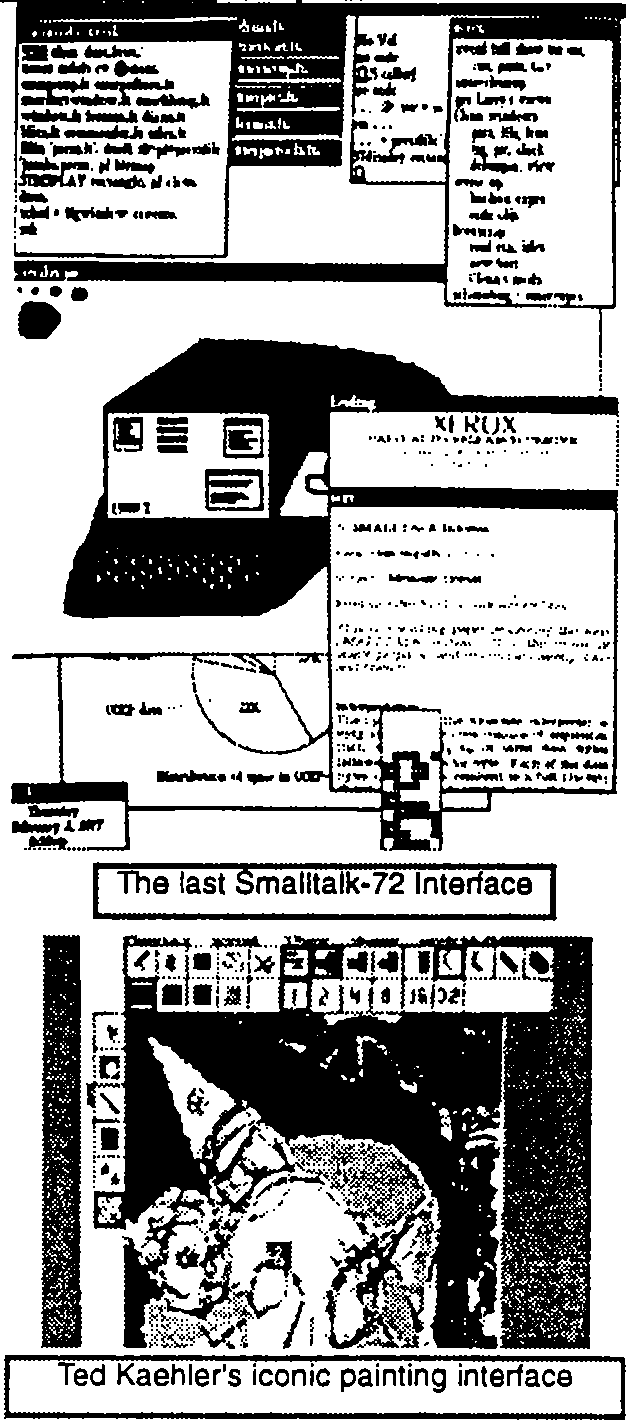

go with it.

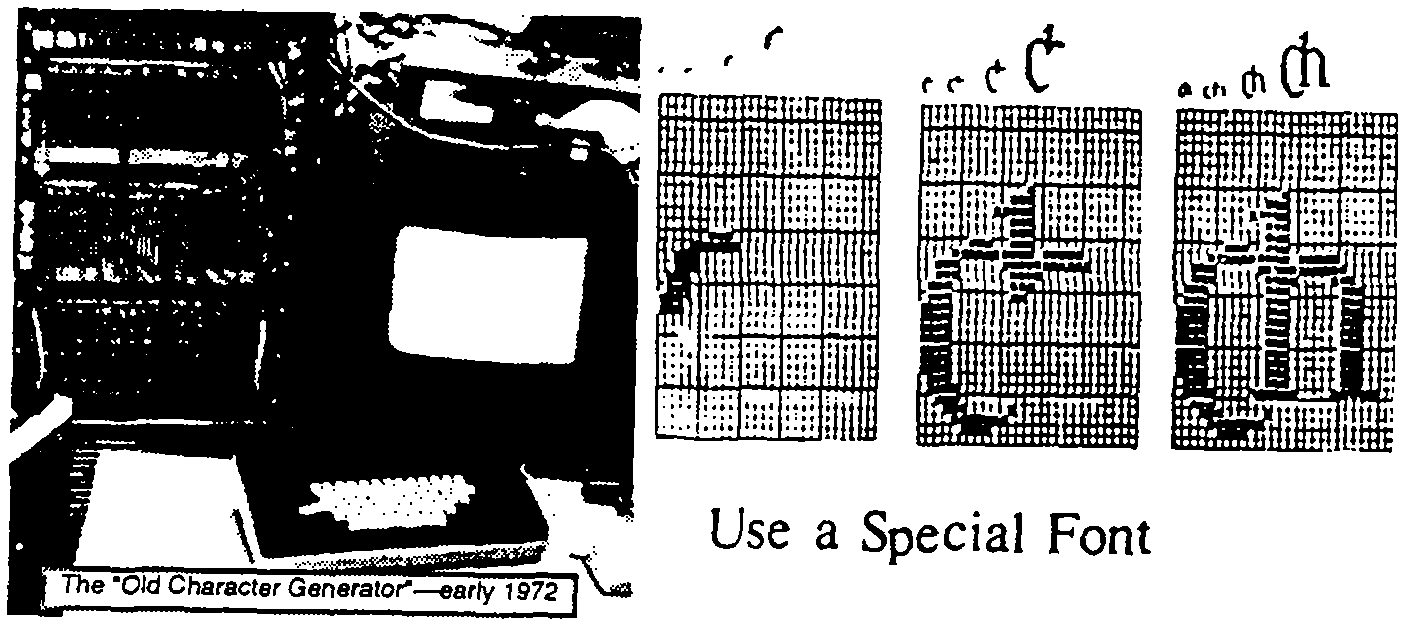

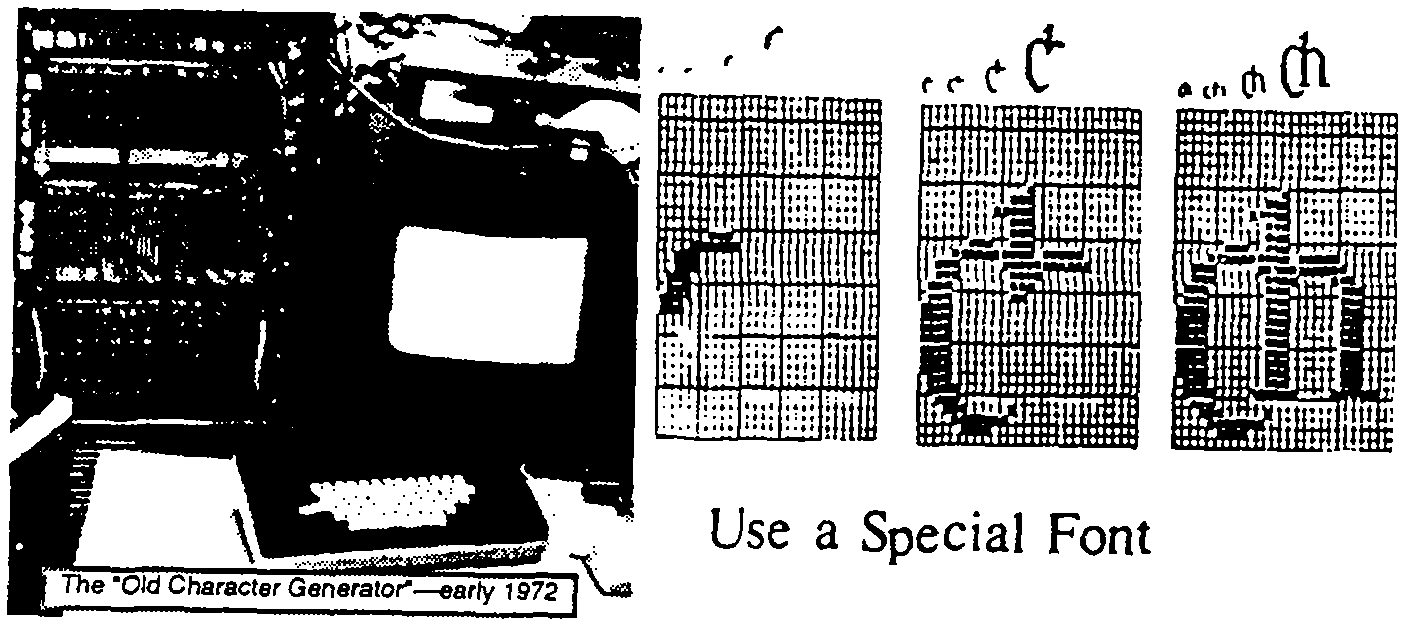

To investigate the use of video as a display medium, Bill English and Butler Lampson specified an

experimental character generator (built by Roger Bates) for the POLOS (PARC OnLine Office System)

terminals. Gary Starkweather had just gotten the first laser printer to work and we ran a coax over to

his lab to feed him some text to print. The "SLOT machine" (Scanning Laser Output Terminal) was

incredible. The only Xerox copier Gary could get to work on went at 1 page a second and could not

be slowed down. So Gary just made the laser run at that rate with a resolution of 500 pixels to the

inch!

The character generator's font memory turned out to be large enough to simulate a bit-map

display if one displayed a fixed "strike" and wrote into the font memory. Ben Laws built a beautiful font

editor and he and I spent several months learning about the peculiarities of the human visual system

(it is decidedly non-linear). I was very interested in high-quality text and graphical presentations

because I thought it would be easier to get the Dynabook into schools as a "trojan horse" by simply

replacing school books rather than to try to explain to teachers and school boards what was really

great about personal computing.

Things were generally going well all over the lab until May of 72 when I tried to get resources to

build a few miniCOMs. A relatively new executive ("X") did not want to give them to me. I wrote a

memo explaining why the system was a good idea (see Appendix II), and then had a meeting to

discuss it. "X" shot it down completely saying among other things that we had used too many green

stamps getting Xerox to fund the time-shared MAXC and this use of resources for personal machines

would confuse them. I was shocked. I crawled away back to the experimental character generator

and made a plan to get 4 more made and hooked to NOVAs for the initial kid experiments.

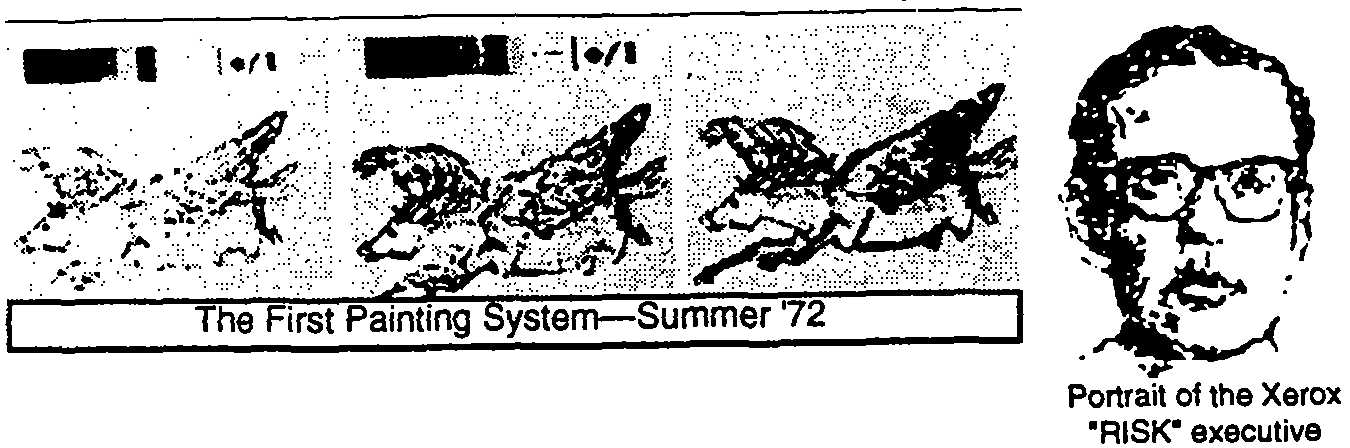

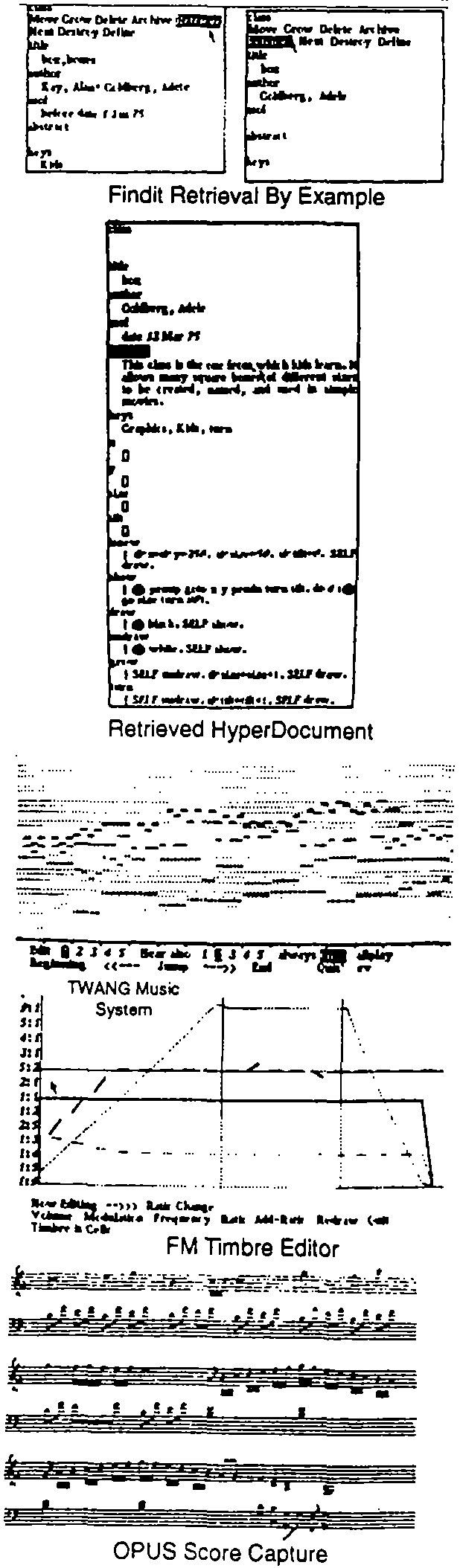

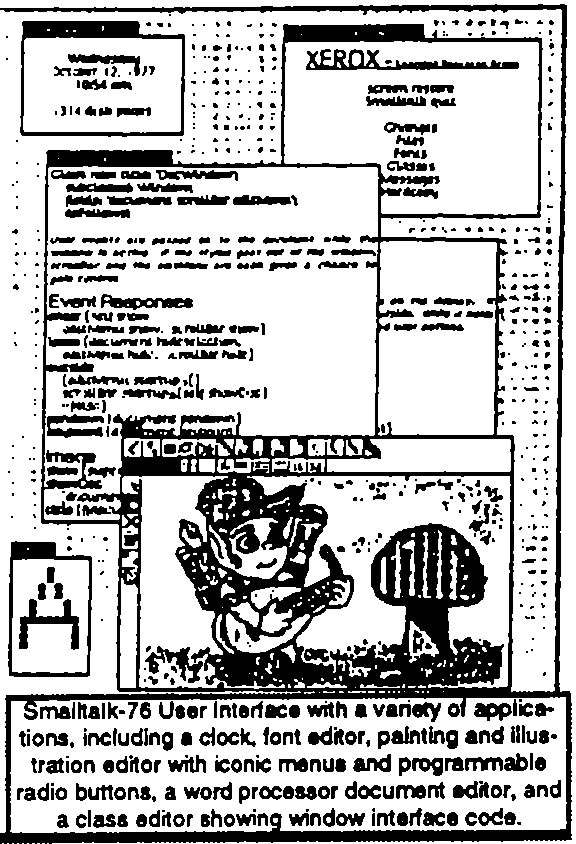

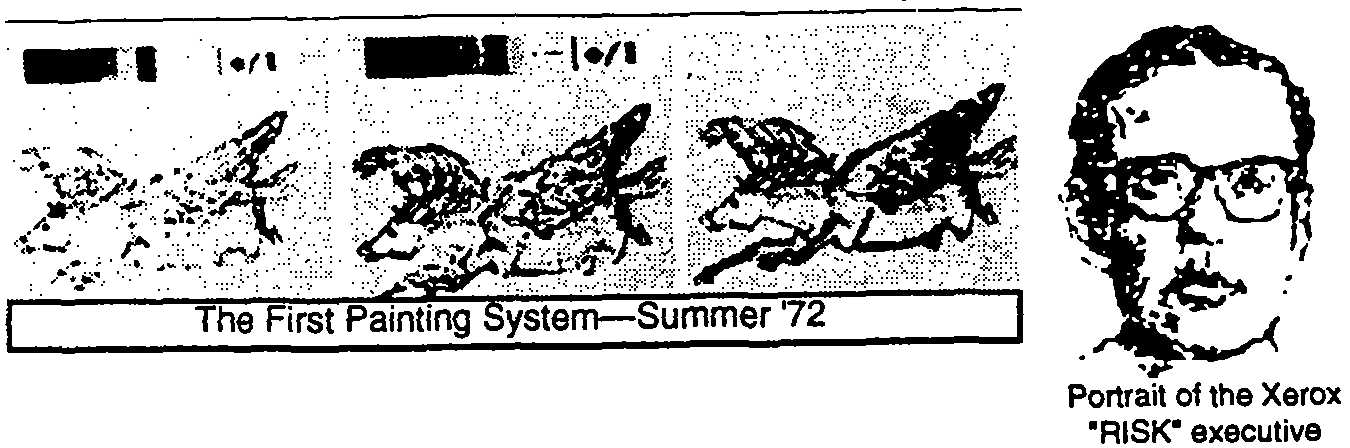

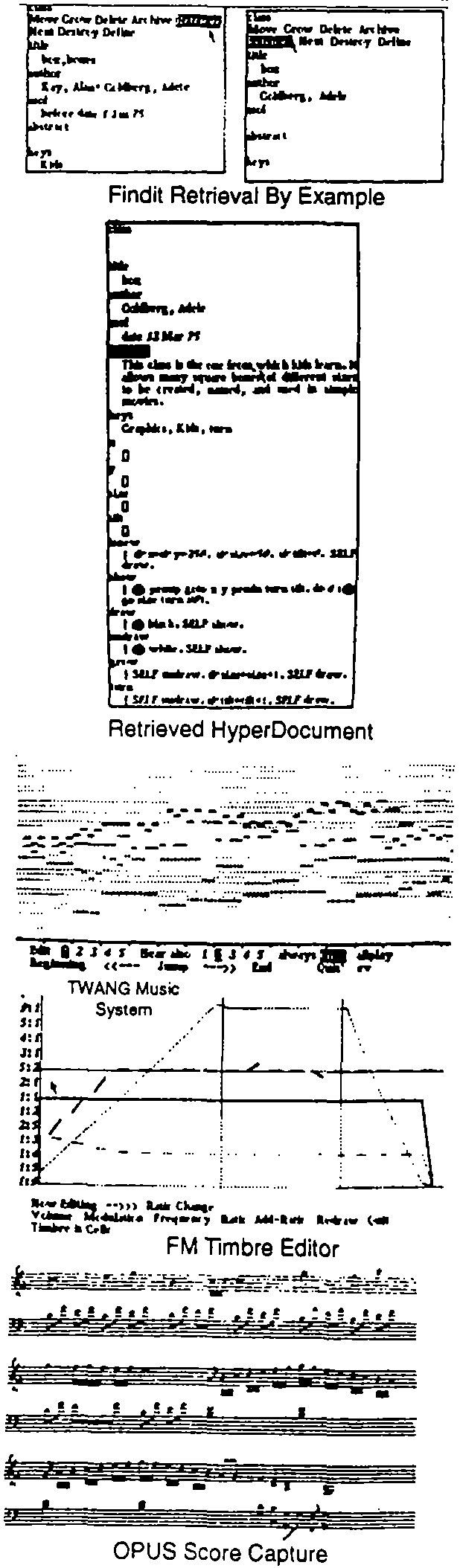

I got Steve Purcell, a summer student from Stanford, to build my design for bit-map painting so

the kids could sketch as well as display computer graphics. John Shoch built a line drawing and

gesture recognition system (based on Ledeen's [Newman and Sproull 72]) that was integrated with the

painting. Bill Duvall of POLOS built a miniNLS that was quite remarkable in its speed and power. The

first overlapping windows started to appear. Bob Shur (with Steve Purcell's help) built a 2 1/2 D

animation system. Along with Ben Laws' font editor, we could give quite a smashing demo of what we

intended to build for real over the next few years. I remember giving one of these to a Xerox

executive, including doing a portrait of him in the new painting system, and

wound it up with a flourish declaring: "And what's really great about

this is that it only has a 20% chance of success. We're taking risk just like

you asked us to!" He looked me straigt in the eye and said, "Boy, that's great, but just make sure it

works." This was a typical executive notion about risk. He wanted us to be in the "20%" one hundred

percent of the time.

That summer, while licking my wounds and getting the demo simulations built and going, Butler

Lampson, Peter Deutsch, and I worked out a general scheme for emulated HLL machine languages. I

liked the B5000 scheme, but Butler did not want to have to decode bytes, and pointed out that since

an 8-bit byte had 256 total possibilities, what we should do is map different meanings onto different

parts of the "instruction space." This would give us a "poor man's Huffman code" that would be

both flexible and simple. All subsequent emulators at PARC used this general scheme.
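The idea of carving one byte's 256 values into regions of different sizes can be sketched as follows. This is only an illustration: the operation names and range boundaries are invented here and are not taken from any PARC emulator. The point is that frequent operations get a large share of the space (so their operand fits in the same byte), rare ones get a small share, and decoding is a range check or a table lookup rather than bit-field extraction.

```python
# Hypothetical partition of the 256 one-byte opcodes. The ranges and
# operation names below are assumptions made up for this sketch.
RANGES = [
    (0, 128, "push_local"),      # 128 codes for the (assumed) most common operation
    (128, 192, "push_literal"),  # 64 codes
    (192, 224, "send"),          # 32 codes
    (224, 240, "jump"),          # 16 codes
    (240, 256, "special"),       # 16 codes for everything rare
]


def decode(byte):
    """Map one instruction byte to (operation, operand within its range)."""
    if not 0 <= byte <= 255:
        raise ValueError("an instruction is a single 8-bit byte")
    for start, end, operation in RANGES:
        if start <= byte < end:
            return operation, byte - start
    raise AssertionError("RANGES must cover 0..255")


# An emulator would typically precompute a 256-entry dispatch table once.
DISPATCH = [decode(b) for b in range(256)]

print(DISPATCH[5])    # ('push_local', 5)
print(DISPATCH[130])  # ('push_literal', 2)
print(DISPATCH[255])  # ('special', 15)
```

Changing the language being emulated then means changing the table, not the decoder, which is one plausible reading of "both flexible and simple."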

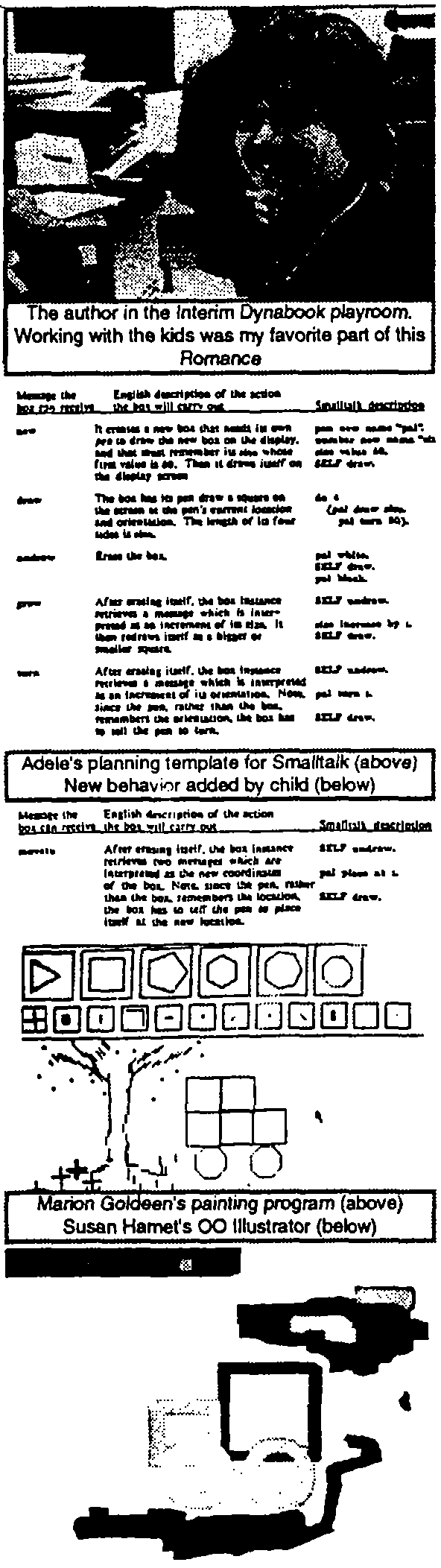

I also took another pass at the language for the kids. Jeff Rulifson was a big fan of Piaget (and

semiotics) and we had many discussions about the "stages" and what iconic thinking might be

about. After reading Piaget and especially Jerome Bruner, I was worried that the directly symbolic

approach taken by FLEX, LOGO (and the current Smalltalk) would be difficult for the kids to process

since evidence existed that the symbolic stage (or mentality) was just starting to switch on. In fact, all

of the educators that I admired (including Montessori, Holt, and Suzuki) seemed to call for a more

figurative, more iconic approach. Rudolf Arnheim [Arnheim 69] had written a classic book about

visual thinking, and so had the eminent art critic Gombrich [Gombrich **]. It really seemed that

something better needed to be done here. GRAIL wasn't it, because its use of imagery was to portray

and edit flowcharts, which seemed like a great step backwards. But Rovner's AMBIT-G held considerably

more promise [Rovner 68]. It was kind of a visual SNOBOL [Farber 63] and the pattern matching

ideas looked like they would work for the more PLANNER-like scheme I was using.

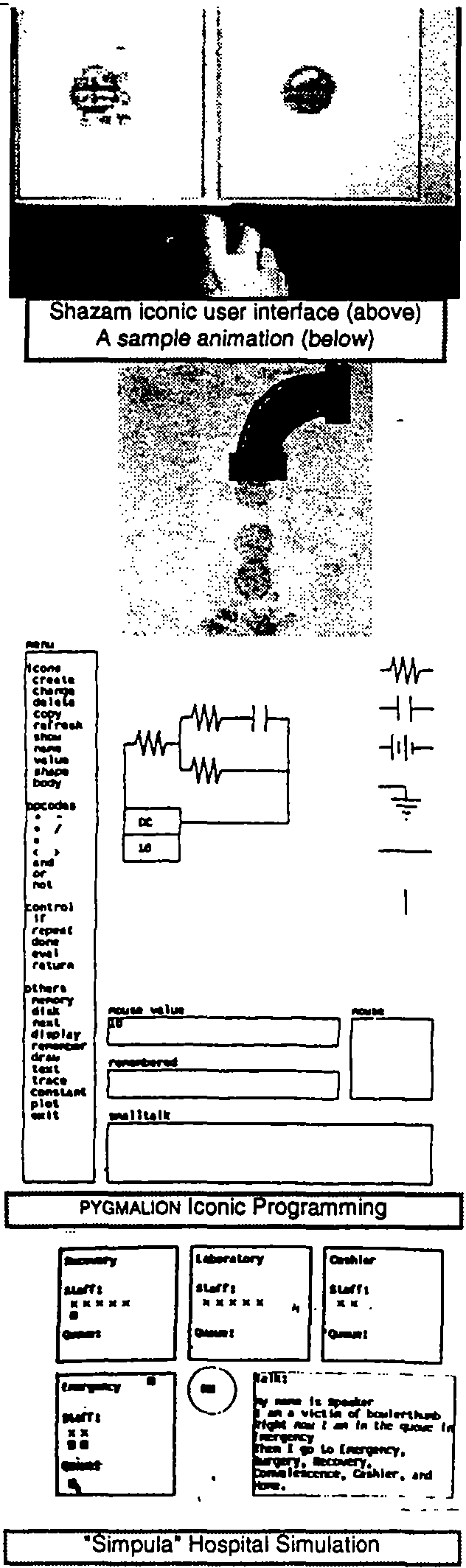

Bill English was still encouraging me to do more reasonable appearing things to get higher credibility,

likemakin budgets, writing plans and milestone notes, so I wrote a plan that proposed over

the next few years that we would build a real system on the character generators cum NOVAs that

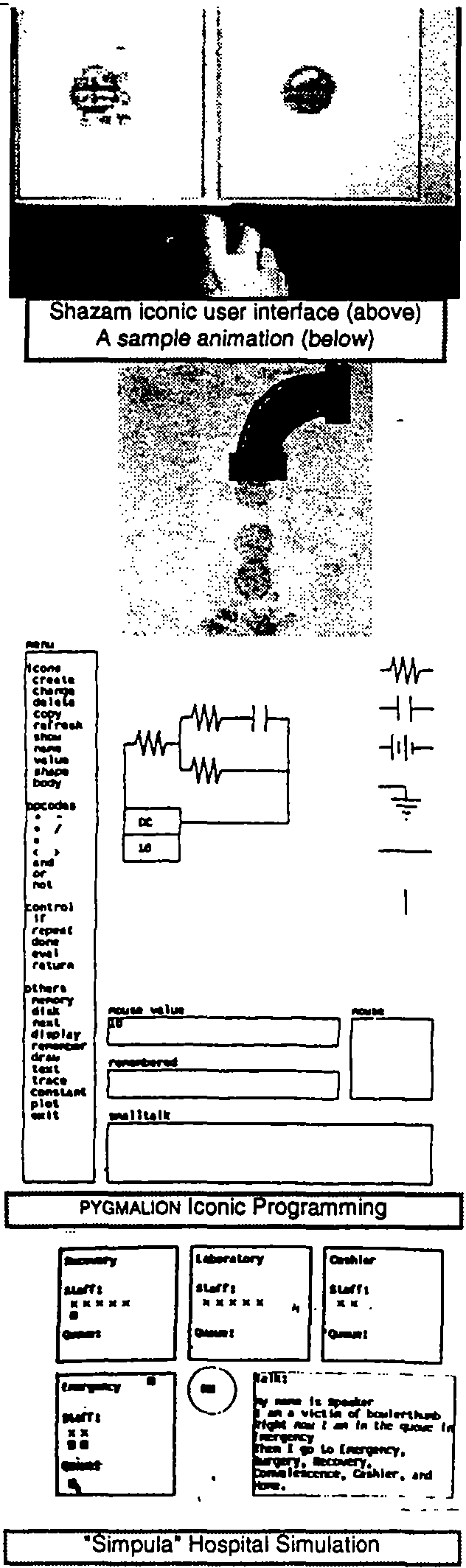

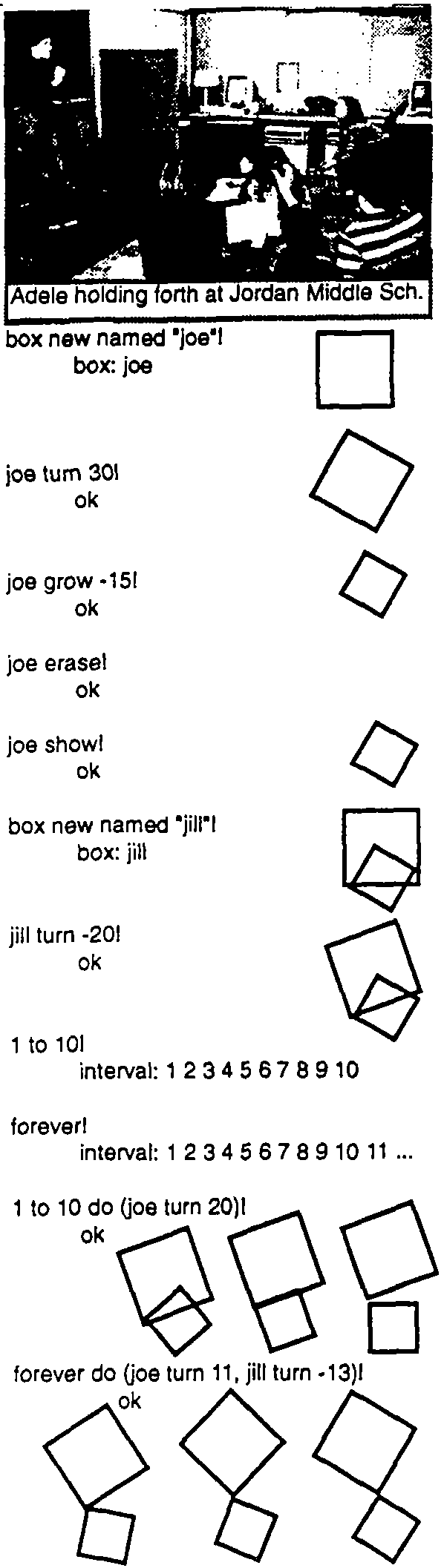

would involve OOP, windows, painting, music, animation, and "iconic programming." The latter was

deemed to be hard and would be handled by the usual method for hard problems, namely, give them

to grad students.

![Children with Dynabooks from 'A Personal Computer For Children Of All Ages' [Kay 72]; Iconic Bubble Sort from 1972 LRG Plan [Kay 72a]](childrenwithdynabooks.png)

In Sept., within a few weeks of each other, two bets happened that changed most of my plans. First,

Butler and Chuck came over and asked: "Do you have any money?" I said, "Yes, about $230K for

NOVAs and CGs. Why?" They said, "How would you like us to build your little machine for you?" I

said, "I'd like it fine. What is it?" Butler said: "I want a '$500 PDP-10', Chuck wants a '10 times faster

NOVA', and you want a 'kiddicomp'. What do you need on it?" I told them most of the results we had

gotten from the fonts, painting, resolution, animation, and music studies. I asked where this had

come from all of a sudden and Butler told me that they wanted to do it anyway, that Executive "X"

was away for a few months on a "task force" so maybe they could "sneak it in", and that Chuck had

a bet with Bill Vitic that he could do a whole machine in just 3 months. "Oh," I said.

The second bet had even more surprising results. I had expected that the new Smalltalk would be

an iconic language and would take at least two years to invent, but fate intervened. One day, in a typical

PARC hallway bullsession, Ted Kaehler, Dan Ingalls, and I were standing around talking about

programming languages. The subject of power came up and the two of them wondered how large a

language one would have to make to get great power. With as much panache as I could muster, I

asserted that you could define the "most powerful language in the world" in "a page of code." They

said, "Put up or shut up."

Ted went back to CMU but Dan was still around egging me on. For the next two weeks I got to

PARC every morning at four o'clock and worked on the problem until eight, when Dan, joined by

Henry Fuchs, John Shoch, and Steve Purcell, showed up to kibitz the morning's work.

I had originally made the boast because McCarthy's self-describing LISP interpreter was written in

itself. It was about "a page", and as far as power goes, LISP was the whole nine yards for functional

languages. I was quite sure I could do the same for object-oriented languages plus be able to do a

reasonable syntax for the code a la some of the FLEX machine techniques.

It turned out to be more difficult than I had first thought for three reasons. First, I wanted the

program to be more like McCarthy's second non-recursive interpreter--the one implemented as a loop

that tried to resemble the original 709 implementation of Steve Russell as much as possible. It was

more "real". Second, the intertwining of the "parsing" with message receipt--the evaluation of

parameters which was handled separately in LISP--required that my object-oriented interpreter re-enter

itself "sooner" (in fact, much sooner) than LISP required. And, finally, I was still not clear how

send and receive should work with each other.

The first few versions had flaws that were soundly criticized by the group. But by morning 8 or so,

a version appeared that seemed to work (see Appendix III for a sketch of how the interpreter was

designed). The major differences from the official Smalltalk-72 of a little bit later were that in the first

version symbols were byte-coded and the receiving of return-values from a send was symmetric--i.e.,

receipt could be like parameter binding--this was particularly useful for the return of multiple values.

For various reasons, this was abandoned in favor of a more expression-oriented functional

return style.

Of course, I had gone to considerable pains to avoid doing any "real work" for the bet, but I felt I had

proved my point. This had been an interesting holiday from our official "iconic programming"

pursuits, and I thought that would be the end of it. Much to my surprise, only a few days later, Dan

Ingalls showed me the scheme working on the NOVA. He had coded it up (in BASIC!), added a lot of

details, such as a token scanner, a list maker, etc., and there it was--running. As he liked to say: "You

just do it and it's done."

It evaluated 3 + 4 v e r y s l o w l y (it was "glacial", as Butler liked to say) but the answer always

came out 7. Well, there was nothing to do but keep going. Dan loved to bootstrap on a system that

"always ran," and over the next ten years he made at least 80 major releases of various flavors of

Smalltalk.

In November, I presented these ideas and a demonstration of the interpretation scheme to the MIT

AI lab. This eventually led to Carl Hewitt's more formal "Actor" approach [Hewitt 73]. In the first

Actor paper the resemblance to Smalltalk is at its closest. The paths later diverged, partly because we

were much more interested in making things than theorizing, and partly because we had something

no one else had: Chuck Thacker's Interim Dynabook (later known as the "ALTO").

Just before Chuck started work on the machine I gave a paper to the National Council of Teachers

of English [Kay 72c] on the Dynabook and its potential as a learning and thinking amplifier--the

paper was an extensive rotogravure of "20 things to do with a Dynabook" [Kay 72c]. By the time I got

back from Minnesota, Stewart Brand's Rolling Stone article about PARC [Brand 1972] and the surrounding

hacker community had hit the stands. To our enormous surprise it caused a major furor at Xerox

headquarters in Stamford, Connecticut. Though it was a wonderful article that really caught the

spirit of the whole culture, Xerox went berserk, forced us to wear badges (over the years many were

printed on t-shirts), and severely restricted the kinds of publications that could be made. This was

particularly disastrous for LRG, since we were the "lunatic fringe" (so-called by the other computer

scientists), were planning to go out to the schools, and needed to share our ideas (and programs)

with our colleagues such as Seymour Papert and Don Norman.

Executive "X" apparently heard some harsh words at Stamford about us, because when he

returned around Christmas and found out about the interim Dynabook, he got even more angry and

tried to kill it. Butler wound up writing a masterful defence of the machine to hold him off, and he

went back to his "task force."

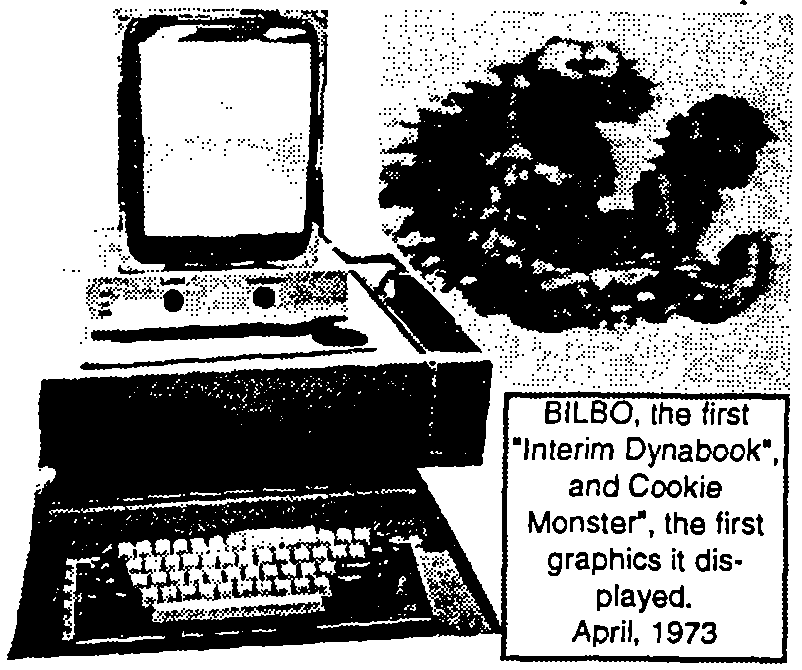

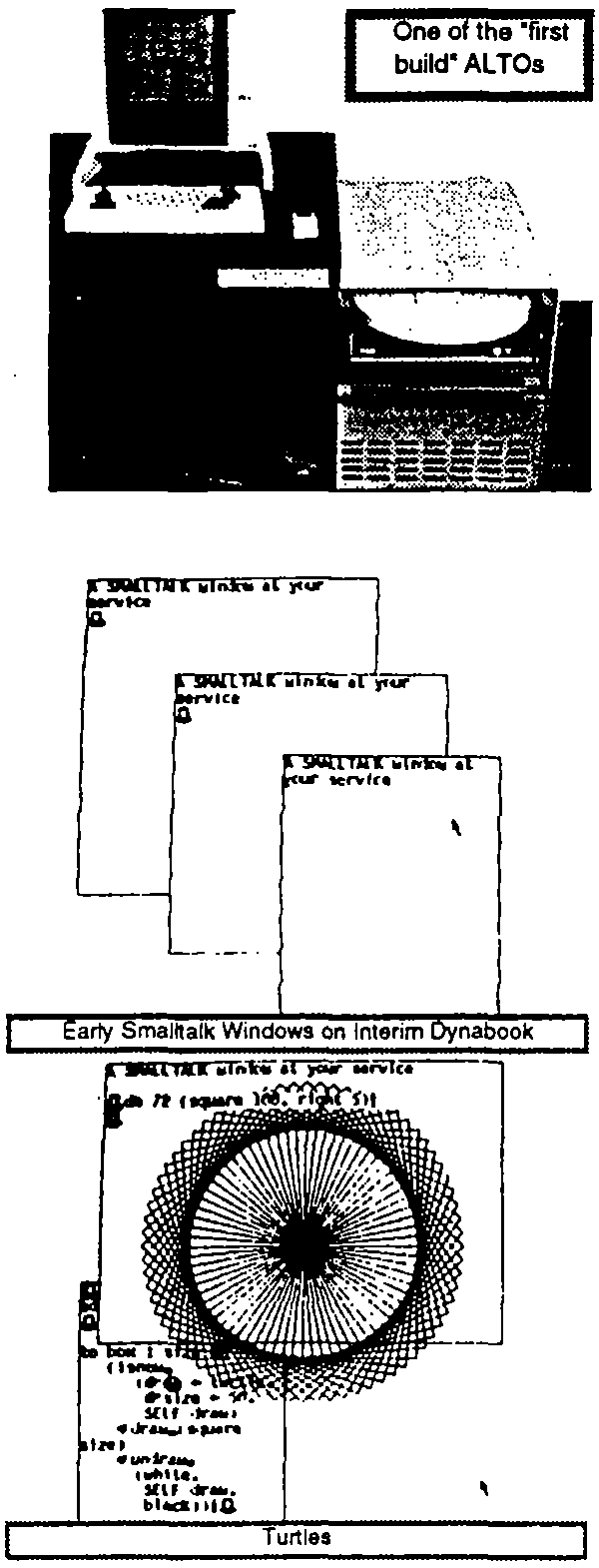

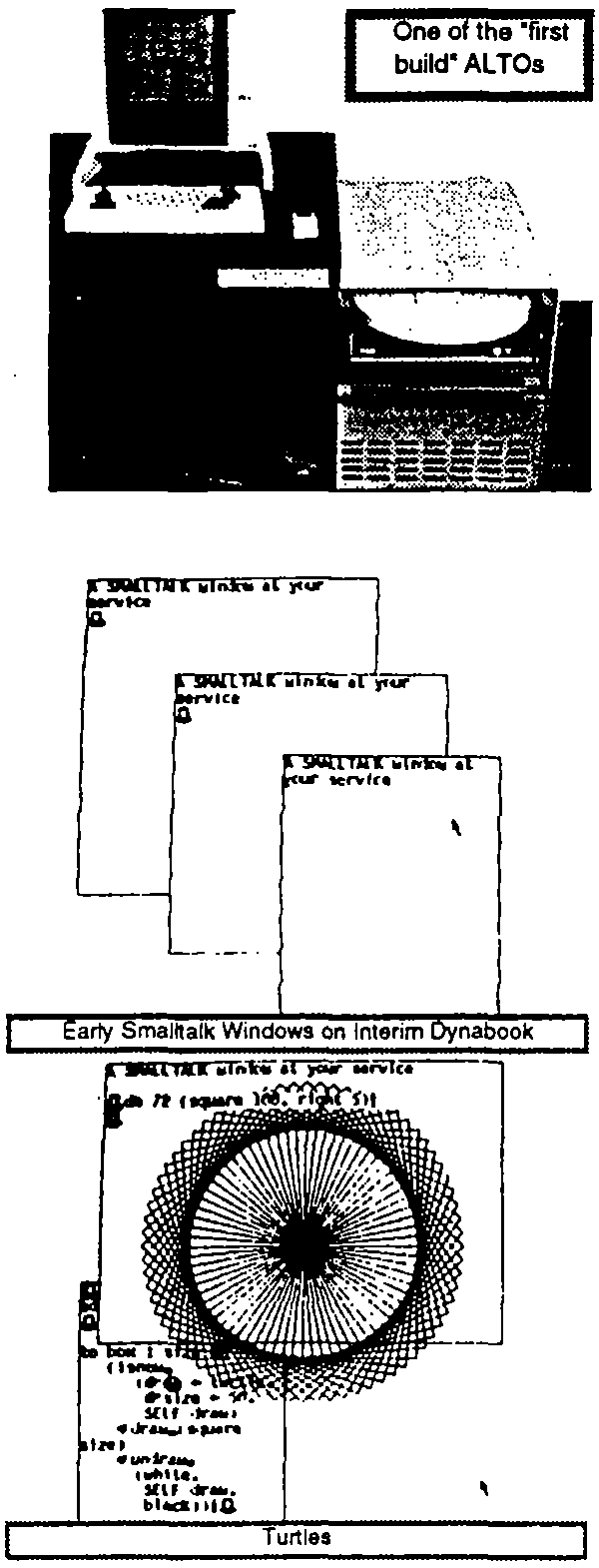

Chuck had started his "bet" on November 22, 1972. He and two technicians did all of the machine

except for the disk interface which was done by Ed McCreight. It had a ~500,000 pixel (606x808)

bitmap display, its microcode instruction rate was about 6 MIPS, it had a grand total of 128k, and the

entire machine (exclusive of the memory) was rendered in 160 MSI chips distributed on two cards. It

was beautiful [Thacker 1972, 1986]. One of the wonderful features of the machine was "zero-overhead"

tasking. It had 16 program counters, one for each task. Condition flags were tied to interesting

events (such as "horizontal retrace pulse", and "disk sector pulse", etc.). Lookaside logic scanned the

flags while the current instruction was executing and picked the highest priority program counter to

fetch from next. The machine never had to wait, and the result was that most hardware functions

(particularly those that involved i/o, like feeding the display and handling the disk) could be

replaced by microcode. Even the refresh of the MOS dynamic RAM was done by a task. In other

words, this was a coroutine architecture. Chuck claimed that he got the idea from a lecture I had

given on coroutines a few months before, but I remembered that Wes Clark's TX-2 (the Sketchpad

machine) had used the idea first, and I probably mentioned that in the talk.
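The tasking scheme described above can be modeled in a few lines. This is a toy sketch under stated assumptions, not the actual microcode design: it assumes that a higher task number means higher priority, that task 0 is a background task whose flag is always set, and that each "instruction" simply advances the task's program counter. The names `Machine`, `wakeup`, and `step` are invented for the illustration.

```python
NUM_TASKS = 16


class Machine:
    """Toy model: one program counter per task, flags choose who runs next."""

    def __init__(self):
        self.pc = [0] * NUM_TASKS          # a private program counter for each task
        self.wakeup = [False] * NUM_TASKS  # condition flags raised by "hardware events"
        self.wakeup[0] = True              # assumption: task 0 is always runnable
        self.trace = []                    # (task, pc) pairs, for inspection

    def next_task(self):
        # Assumption: higher index = higher priority.
        for task in range(NUM_TASKS - 1, -1, -1):
            if self.wakeup[task]:
                return task
        raise RuntimeError("no runnable task")

    def step(self):
        # Pick a task, "execute" one instruction from its own program counter.
        task = self.next_task()
        self.trace.append((task, self.pc[task]))
        self.pc[task] += 1
        return task


m = Machine()
m.step()              # background task runs
m.wakeup[7] = True    # e.g. a hypothetical "disk sector pulse" flag
m.step()
m.step()
m.wakeup[7] = False   # the event has been serviced
m.step()              # background task resumes where it left off
print(m.trace)        # [(0, 0), (7, 0), (7, 1), (0, 1)]
```

Because every task keeps its own program counter, switching tasks saves and restores nothing, which is what makes the arrangement behave like a set of coroutines.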

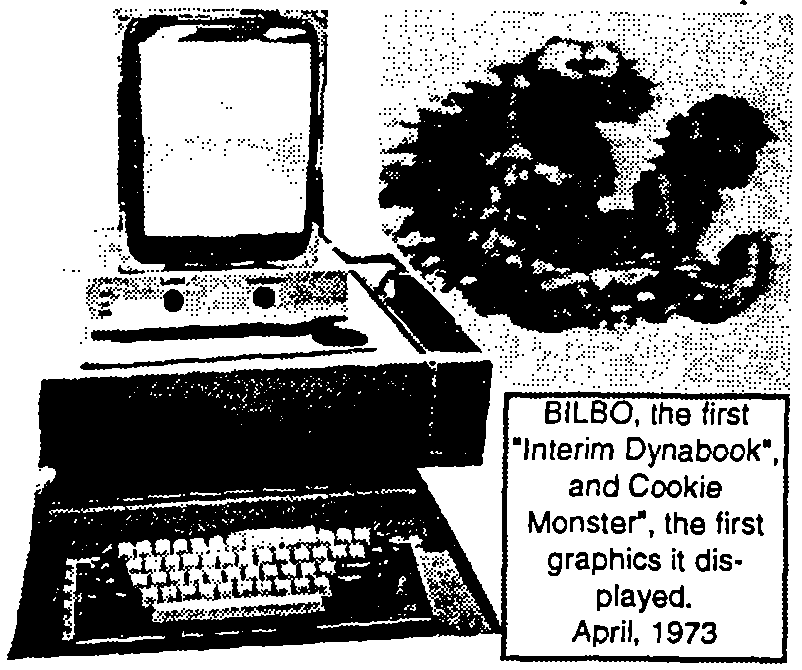

In early April, just a little over three months from the start, the first Interim Dynabook, known as 'Bilbo,' greeted the

world and we had the first bit-map picture on the screen within minutes: the Muppets' Cookie Monster that I had

sketched on our painting system. Soon Dan had bootstrapped Smalltalk across, and for many months it was the sole

software system to run on the Interim Dynabook. Appendix I has an "acknowledgements" document I wrote

from this time that is interesting in its allocation of credits and the various priorities associated with them. My $230K

was enough to get 15 of the original projected 30 machines (over the years some 2000 Interim Dynabooks were actually built). True to

Schopenhauer's observation, Executive "X" now decided that the Interim Dynabook was a good idea

and he wanted all but two for his lab (I was in the other lab). I had to go to considerable lengths to

get our machines back, but finally succeeded.

1. Everything is an object

2. Objects communicate by sending and receiving messages (in terms of objects)

3. Objects have their own memory (in terms of objects)

4. Every object is an instance of a class (which must be an object)

5. The class holds the shared behavior for its instances (in the form of objects in a program list)

6. To eval a program list, control is passed to the first object and the remainder is treated as its message
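The last idea in the list, that evaluating a program list hands control to the first object and treats the rest as its message, can be sketched roughly as follows. This is a simplified illustration, not the Smalltalk-72 interpreter: the names `Num`, `receive`, and `eval_list` are invented, selectors are plain strings rather than objects, and arguments are consumed strictly left to right.

```python
class Num:
    """A number object that interprets the message stream it is handed."""

    def __init__(self, value):
        self.value = value

    def receive(self, message):
        # The receiver itself decides how to consume the remaining tokens.
        result = self
        while message:
            selector = message.pop(0)
            if selector == "+":
                result = Num(result.value + message.pop(0).value)
            elif selector == "*":
                result = Num(result.value * message.pop(0).value)
            else:
                raise ValueError(f"message not understood: {selector!r}")
        return result


def eval_list(program):
    """Pass control to the first object; the remainder is its message."""
    tokens = list(program)      # copy so the caller's list is left untouched
    receiver = tokens.pop(0)
    return receiver.receive(tokens)


print(eval_list([Num(3), "+", Num(4)]).value)  # 7
```

Note that `eval_list` knows nothing about arithmetic. All of the "syntax" lives in the receiving object, which is the sense in which parsing is intertwined with message receipt.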

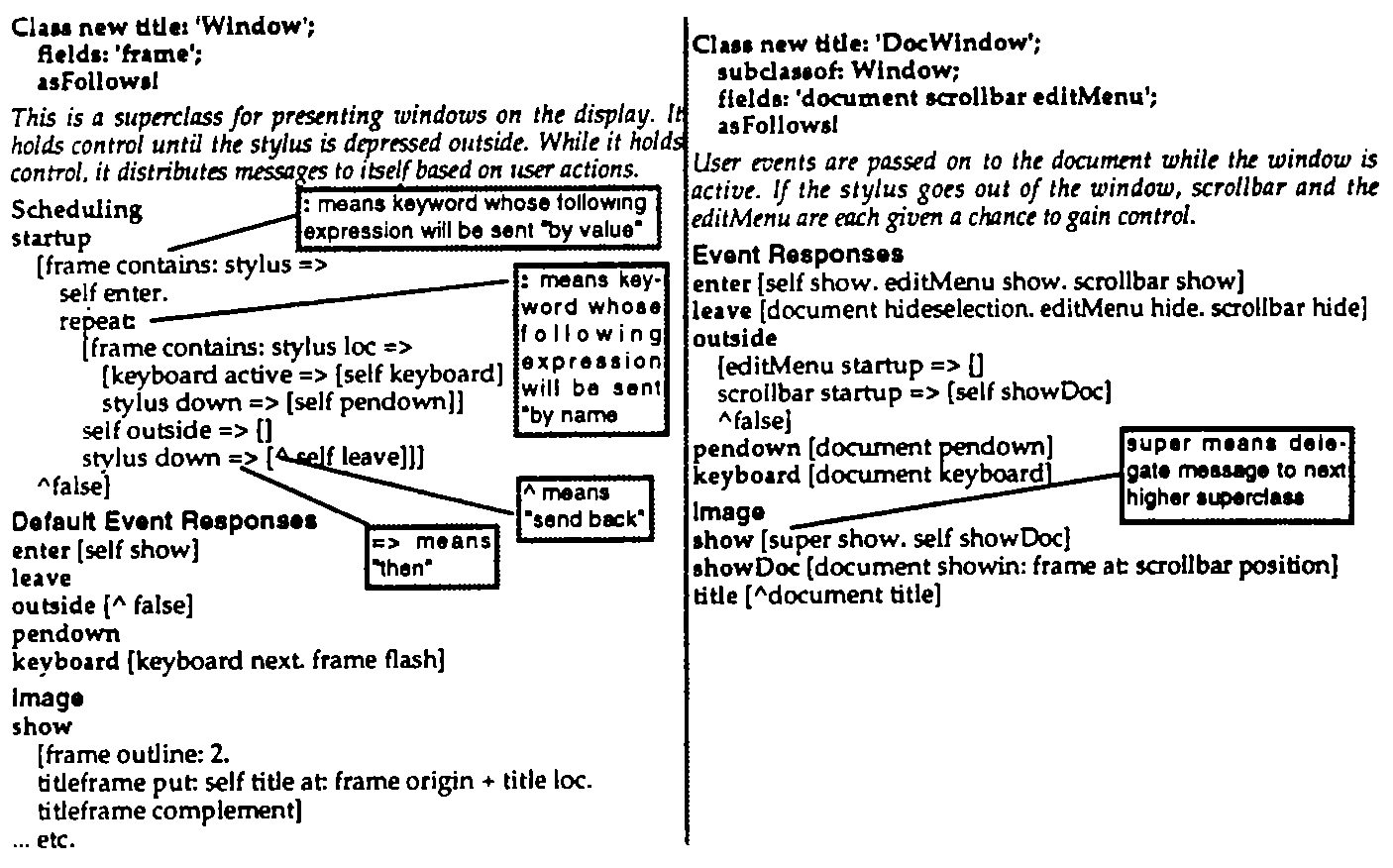

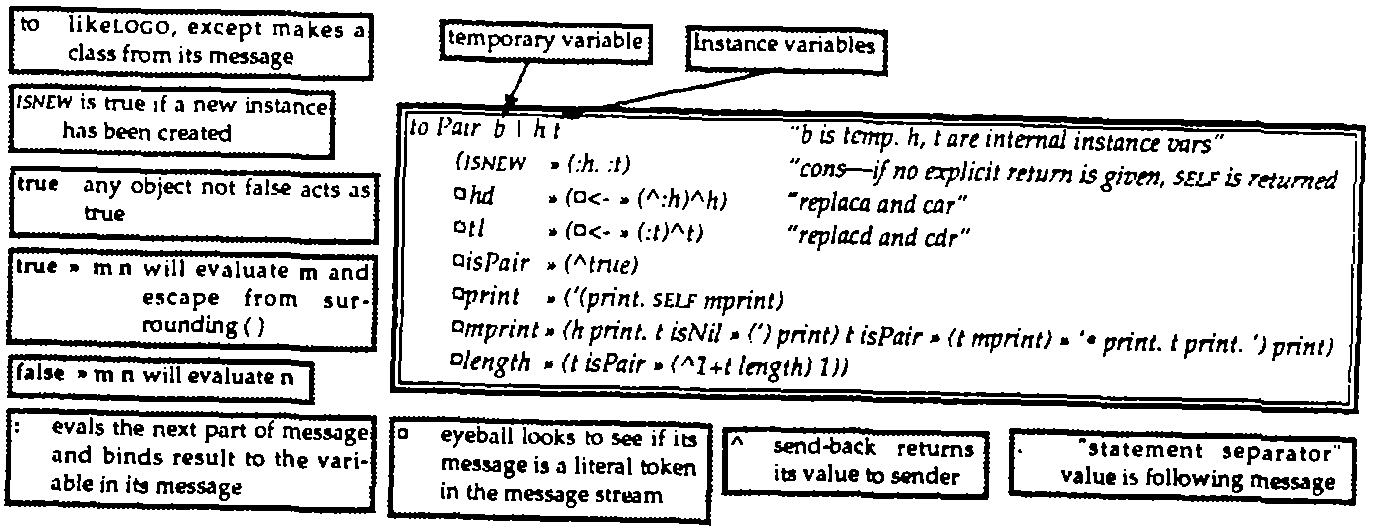

By this time most of Smalltalk's schemes had been sorted out into six main ideas that were in accord with the initial

premises in designing the interpreter. The first three principles are what objects "are about"--how they are seen and

used from "the outside." These did not require any modification over the years. The last three--objects from the

inside--were tinkered with in every version of Smalltalk (and in subsequent OOP designs). In this scheme (1 & 4)

imply that classes are objects and that they must be instances of themselves. (6) implies a LISP-like universal syntax, but with the receiving object as the first